Greetings friends, a few years ago I told you about all the advantages of using Microsoft’s ReFS file system for a Veeam Backup Repository where a synthetic full backup is generated every week.

Veeam has long recommended this technology because it makes the disk operations performed when a synthetic full is generated really fast, so fast that Veeam flags them in its GUI as [fast clone].

So far, everything is wonderful, and I’m sure you are already using it in your datacenter. However, one of the new features in Veeam Backup & Replication v10 is support for Linux-based repositories formatted with XFS and the reflink flag, which provides essentially the same block-cloning capability as ReFS.

At the time of writing, it is officially supported on Ubuntu 18.04 LTS and experimental on the rest of the distributions.

XFS (Reflink) explained in conjunction with Veeam Backup & Replication

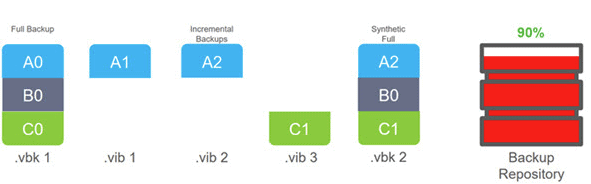

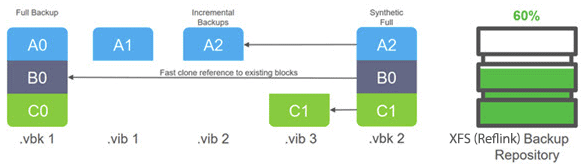

To take a closer look at how XFS works with reflink enabled, let’s first think of a case on NTFS, where we have the typical weekly full backup, the incrementals and the synthetic full backup. On NTFS, it would look like this:

If we use XFS with reflink enabled instead, the synthetic full does not have to copy all the blocks from the existing full and incremental backups into a new file. It uses XFS reflink to reference those existing blocks (a fast clone), which makes the operation much faster and takes up far less disk space.

So we can safely say that XFS repositories with reflink enabled reduce the disk space our synthetic full backups consume and make them much faster to create.

How to enable XFS (Reflink) in Ubuntu 18.04 LTS

First of all, note that XFS reflink is only available in modern kernel versions. For example, it is not available on Ubuntu 16.04 or earlier, so I recommend using Ubuntu 18.04 or any other modern system.
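If you are not sure whether a kernel is recent enough, the check can be scripted. This is a minimal sketch that assumes 4.9 as the minimum, since that is the release in which XFS reflink support was merged (initially as an experimental feature); Ubuntu 16.04 ships with a 4.4 kernel by default and Ubuntu 18.04 with 4.15. The function name `kernel_supports_xfs_reflink` is hypothetical, and it only looks at the kernel: the `mkfs.xfs` from your xfsprogs package must also understand the reflink option.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the given kernel release (default: the
# running one) is at least 4.9, the release where XFS reflink was merged
# (initially as an experimental feature). It does not check xfsprogs.
kernel_supports_xfs_reflink() {
  local release="${1:-$(uname -r)}"
  local minimum="4.9"
  # Keep only the numeric part: "4.15.0-91-generic" -> "4.15.0"
  release="${release%%-*}"
  # sort -V prints the lower version first; if that is the minimum,
  # the release is equal to or newer than it
  [ "$(printf '%s\n' "$minimum" "$release" | sort -V | head -n 1)" = "$minimum" ]
}

# Usage on the repository server:
# kernel_supports_xfs_reflink && echo "Kernel $(uname -r) is new enough for XFS reflink"
```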

Operating System Installation

For the step-by-step installation of Ubuntu Server 18.04 LTS, we will rely on the following video:

Once everything is up and running, we’ll add an extra disk to this Linux server.

Command to Enable XFS (reflink) in Ubuntu 18.04 LTS

Once we have added the disk, we can check that everything is OK by running lshw -class disk -class storage -short, which will show something similar to the following. Note my sdb, which has 500GB (lshw reports it as 536GB because it uses decimal units, and 500GiB is about 536.9GB):

```
H/W path           Device      Class      Description
=======================================================
/0/100/7.1                     storage    82371AB/EB/MB PIIX4 IDE
/0/100/11/1                    storage    SATA AHCI controller
/0/100/15/0        scsi2       storage    PVSCSI SCSI Controller
/0/100/15/0/0.0.0  /dev/sda    disk       21GB Virtual disk
/0/100/15/0/0.1.0  /dev/sdb    disk       536GB Virtual disk
/0/46              scsi3       storage
/0/46/0.0.0        /dev/cdrom  disk       VMware SATA CD00
```

We will now format the entire disk with XFS, using the parameters Veeam needs to perform fast clone: reflink enabled and CRC enabled (XFS reflink requires the CRC-enabled metadata format):

```shell
mkfs.xfs -b size=4096 -m reflink=1,crc=1 /dev/sdb
```

The output should look similar to the following, showing that both CRC and reflink are enabled:

```
meta-data=/dev/sdb               isize=512    agcount=4, agsize=32768000 blks
         =                       sectsz=512   attr=2, projid32bit=1
         =                       crc=1        finobt=1, sparse=0, rmapbt=0, reflink=1
data     =                       bsize=4096   blocks=131072000, imaxpct=25
         =                       sunit=0      swidth=0 blks
naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
log      =internal log           bsize=4096   blocks=64000, version=2
         =                       sectsz=512   sunit=0 blks, lazy-count=1
realtime =none                   extsz=4096   blocks=0, rtextents=0
```
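To confirm this later without re-running mkfs, you could feed the output of xfs_info (which prints the same geometry for a mounted file system) into a small check that both crc=1 and reflink=1 are present. This is only a sketch: the function name `xfs_reflink_ready` is hypothetical, and it simply pattern-matches the text output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads mkfs.xfs or xfs_info output on stdin and
# succeeds only if both crc=1 and reflink=1 are reported.
xfs_reflink_ready() {
  local info
  info="$(cat)"
  # Match the whole key=value token so e.g. "reflink=1" is not confused
  # with a longer name that happens to end in it
  printf '%s\n' "$info" | grep -Eq '(^|[[:space:],])crc=1([[:space:],]|$)' &&
    printf '%s\n' "$info" | grep -Eq '(^|[[:space:],])reflink=1([[:space:],]|$)'
}

# Usage after mounting (xfs_info accepts the mount point):
# xfs_info /backups | xfs_reflink_ready && echo "CRC and reflink are enabled"
```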

All that’s left is to mount this new disk on a folder, which we first have to create:

```shell
mkdir /backups
mount /dev/sdb /backups
```
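If you script this step, it helps to make it safe to run more than once. The sketch below uses two hypothetical functions, `is_mounted` and `mount_backups`: it creates the folder and only calls mount when nothing is mounted there yet, by reading the kernel’s mount table in /proc/self/mounts (the table path can be overridden, which is mainly useful for testing).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if something is mounted on directory $1,
# according to the mount table $2 (default: /proc/self/mounts).
# Note: the mount table escapes spaces as \040, so paths with spaces are
# not handled by this simple comparison.
is_mounted() {
  local dir="$1" table="${2:-/proc/self/mounts}"
  # Field 2 of each mount-table line is the mount point
  awk -v d="$dir" '$2 == d { found = 1 } END { exit (found ? 0 : 1) }' "$table"
}

# Hypothetical helper: create mount point $2 and mount device $1 on it,
# unless it is already mounted (safe to run more than once).
mount_backups() {
  local dev="$1" dir="$2" table="${3:-/proc/self/mounts}"
  mkdir -p "$dir"
  if is_mounted "$dir" "$table"; then
    echo "$dir is already mounted, skipping" >&2
    return 0
  fi
  mount "$dev" "$dir"
}

# Usage (as root):
# mount_backups /dev/sdb /backups
```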

A simple df -hT shows that everything is correct: as you can see, my Linux operating system uses EXT4 and my repository uses XFS (500GB) with the relevant attributes:

```
df -hT
Filesystem                        Type      Size  Used Avail Use% Mounted on
udev                              devtmpfs  1.9G     0  1.9G   0% /dev
tmpfs                             tmpfs     395M  1.1M  394M   1% /run
/dev/mapper/ubuntu--vg-ubuntu--lv ext4       19G  4.4G   14G  25% /
tmpfs                             tmpfs     2.0G     0  2.0G   0% /dev/shm
tmpfs                             tmpfs     5.0M     0  5.0M   0% /run/lock
tmpfs                             tmpfs     2.0G     0  2.0G   0% /sys/fs/cgroup
/dev/sda2                         ext4      976M  145M  765M  16% /boot
tmpfs                             tmpfs     395M     0  395M   0% /run/user/1000
/dev/sdb                          xfs       500G  3.6G  497G   1% /backups
```

Note: I obviously recommend adding this new disk to your /etc/fstab so that it is always mounted. First we need the UUID of the disk, which we get by running blkid /dev/YOURDISK:

```
blkid /dev/sdb
/dev/sdb: UUID="fd4c0586-2d7b-4b11-87b4-721d8529875b" TYPE="xfs"
```

Now we will append the entry to /etc/fstab as follows, which is easier than fiddling with vi or nano (although you could use them too). Note that I use my own UUID and the path where I want the disk to be mounted:

```shell
echo 'UUID=fd4c0586-2d7b-4b11-87b4-721d8529875b /backups xfs defaults 1 1' >> /etc/fstab
```
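Running an `>>` append twice leaves a duplicate line in /etc/fstab, so a scripted version might first check whether the UUID is already listed. This is a sketch with a hypothetical helper, `add_fstab_entry`, that writes the same fields as the command above. `blkid -s UUID -o value` prints just the UUID, and unmounting /backups and then running `mount -a` is a simple way to confirm the entry works before the next reboot.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an fstab line for UUID $1 mounted on $2,
# unless a line for that UUID already exists (safe to run more than once).
# $3 selects the file to edit; it defaults to /etc/fstab.
add_fstab_entry() {
  local uuid="$1" mountpoint="$2" fstab="${3:-/etc/fstab}"
  if grep -q "^UUID=${uuid}[[:space:]]" "$fstab" 2>/dev/null; then
    echo "An entry for UUID=${uuid} already exists in ${fstab}" >&2
    return 0
  fi
  # Same fields as the echo command above: xfs, defaults, dump 1, pass 1
  printf 'UUID=%s %s xfs defaults 1 1\n' "$uuid" "$mountpoint" >> "$fstab"
}

# Usage (as root):
# add_fstab_entry "$(blkid -s UUID -o value /dev/sdb)" /backups
# umount /backups && mount -a && findmnt /backups
```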

We’ve got everything! Let’s run some cool tests.

Check XFS (Reflink) on Ubuntu 18.04 LTS

There are several ways to check how this technology works, in case the diagram above wasn’t clear to you. Let’s look at them.

I will create a dummy 10GB file. It can be smaller; just change 10240 to the number of MiB you want. In my case it took a while:

```
dd if=/dev/urandom of=test bs=1M count=10240
10240+0 records in
10240+0 records out
10737418240 bytes (11 GB, 10 GiB) copied, 75.7913 s, 142 MB/s
```

If I run df -h now, I see 14GB in use: about 4GB of metadata plus the new 10GB file:

```
df -h .
Filesystem      Size  Used Avail Use% Mounted on
/dev/sdb        500G   14G  487G   3% /backups
```

Now I’m going to copy this same file with the --reflink option; the command and its result look like this:

```
cp -v --reflink=always test test-one
'test' -> 'test-one'
```
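With `--reflink=always`, cp fails with an error instead of falling back to a normal copy when the file system cannot clone, which makes it a handy probe for any folder. A minimal sketch, using a hypothetical function name `supports_reflink`: it clones a tiny temporary file and removes both files again.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds (0) if directory $1 supports reflink copies,
# fails (1) if it does not, and returns 2 if the probe file can't be created.
supports_reflink() {
  local dir="${1:-.}" src dst rc
  src="$(mktemp -p "$dir" .reflink-probe.XXXXXX)" || return 2
  dst="${src}.clone"
  printf 'probe\n' > "$src"
  # --reflink=always errors out instead of silently doing a full copy
  if cp --reflink=always "$src" "$dst" 2>/dev/null; then
    rc=0
  else
    rc=1
  fi
  rm -f "$src" "$dst"
  return "$rc"
}

# Usage:
# supports_reflink /backups && echo "/backups supports reflink"
```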

An ls -hsl tells me, of course, that 20GB of disk space is consumed, 10GB for each file, because ls counts the blocks of each file separately even when they are shared:

```
ls -hsl
total 20G
10G -rw-r--r-- 1 root root 10G Mar 18 21:20 test
10G -rw-r--r-- 1 root root 10G Mar 18 21:25 test-one
```

However, df shows that the actual disk space consumption has not grown at all:

```
df -h .
Filesystem      Size  Used Avail Use% Mounted on
/dev/sdb        500G   14G  487G   3% /backups
```

Everything fantastic so far, I hope. Let’s look in more detail at what’s going on. With the command filefrag -v FILEONE FILETWO we can see the following:

```
filefrag -v test test-one
Filesystem type is: 58465342
File size of test is 10737418240 (2621440 blocks of 4096 bytes)
 ext:     logical_offset:        physical_offset: length:   expected: flags:
   0:        0.. 2097135:         20..   2097155: 2097136:             shared
   1:  2097136.. 2621439:    2097156..   2621459:  524304:             last,shared,eof
test: 1 extent found
File size of test-one is 10737418240 (2621440 blocks of 4096 bytes)
 ext:     logical_offset:        physical_offset: length:   expected: flags:
   0:        0.. 2097135:         20..   2097155: 2097136:             shared
   1:  2097136.. 2621439:    2097156..   2621459:  524304:             last,shared,eof
test-one: 1 extent found
```

What’s all this? Well, we can see that both files have the same extents, located at the same physical offsets on the disk, and that every extent is flagged as shared. This is basically what the diagram above showed: nothing new is written; both files simply reference and use the same blocks.
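The same comparison can be automated by parsing the filefrag -v output for two files and checking that their physical ranges are identical. This is a sketch with a hypothetical function name, `same_physical_extents`; it assumes the colon-separated column layout shown above (extent number, logical range, physical range, length, …) and only succeeds if every physical range matches, in the same order.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `filefrag -v FILE1 FILE2` output on stdin and
# succeeds only if both files list exactly the same physical ranges, in the
# same order, i.e. they reference the same blocks on disk.
same_physical_extents() {
  awk -F':' '
    # Each "File size of ..." line starts the section of the next file
    /^File size of / { file++; next }
    # Extent rows start with the extent number; field 3 is the physical range
    $1 ~ /^[ \t]*[0-9]+$/ {
      range = $3
      gsub(/[ \t]/, "", range)          # "  20..   2097155" -> "20..2097155"
      phys[file] = phys[file] " " range
    }
    END {
      ok = (file == 2 && phys[1] != "" && phys[1] == phys[2])
      exit (ok ? 0 : 1)
    }
  '
}

# Usage:
# filefrag -v test test-one | same_physical_extents && echo "Same blocks on disk"
```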

Creating a Veeam Repository for XFS (Reflink)

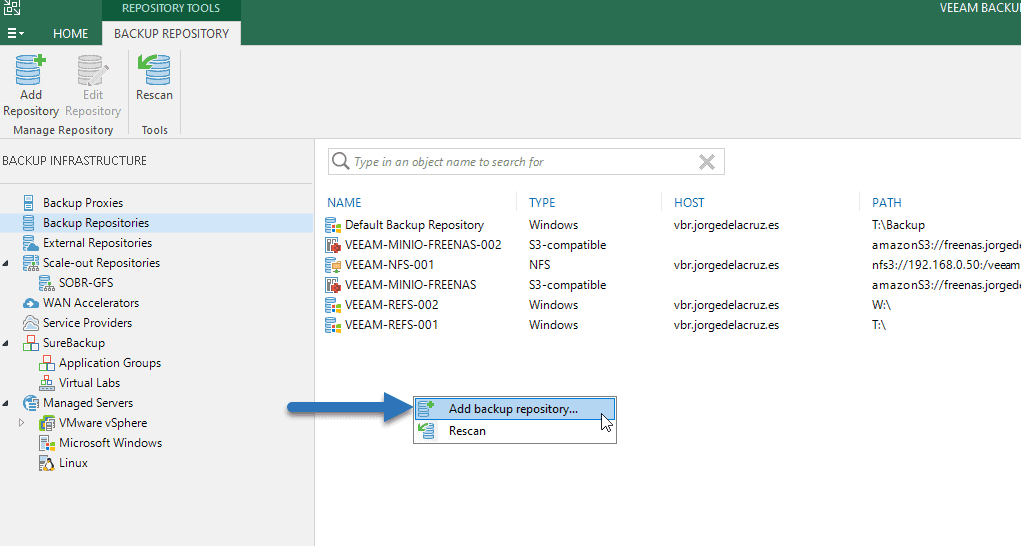

Once our Ubuntu 18.04 server with XFS (reflink) is configured as described above, it’s time to add the repository in the usual way, under Backup Infrastructure > Add Repository:

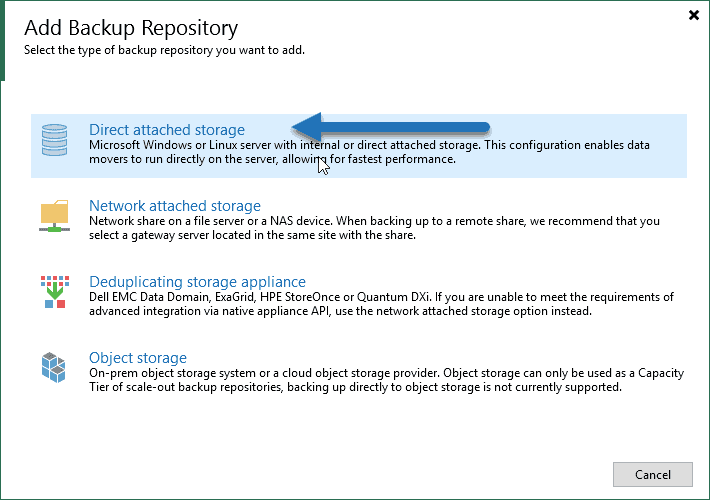

We will select Direct attached storage as the repository type:

And in this section we will now select Linux:

We will select the Ubuntu 18.04 LTS machine and its credentials, and we can even click Populate to see the available volumes:

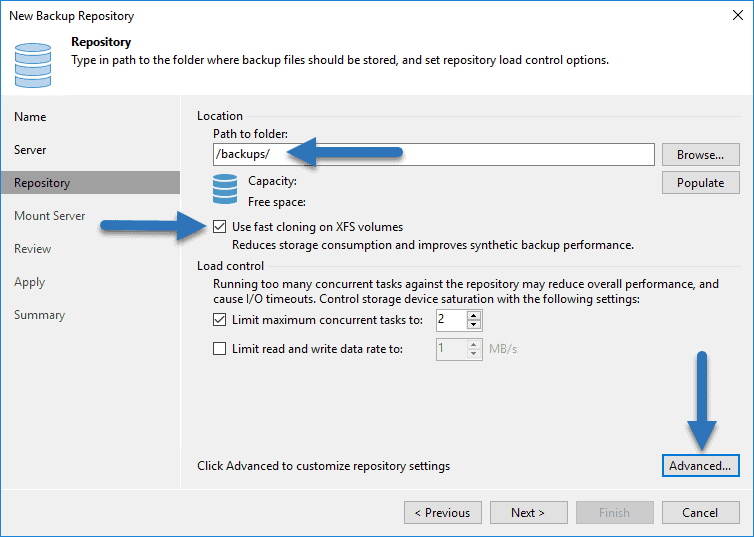

Now we will select the folder inside the volume where we want to store the backups. We will also see an additional checkbox called “Use fast cloning on XFS volumes”. We can click Advanced to confirm that nothing is selected there:

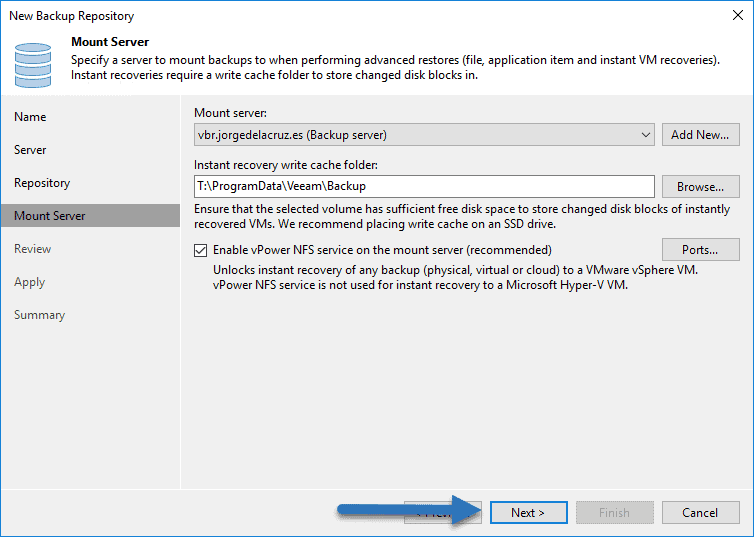

Finally, we will select the Mount Server and the path for vPowerNFS:

Configuring the Veeam Backup Jobs correctly for Veeam Repositories with XFS format (Reflink)

Once the new Veeam Backup Repository is ready and configured following the previous steps, it’s time to create backup jobs that target this new repository. Let’s go.

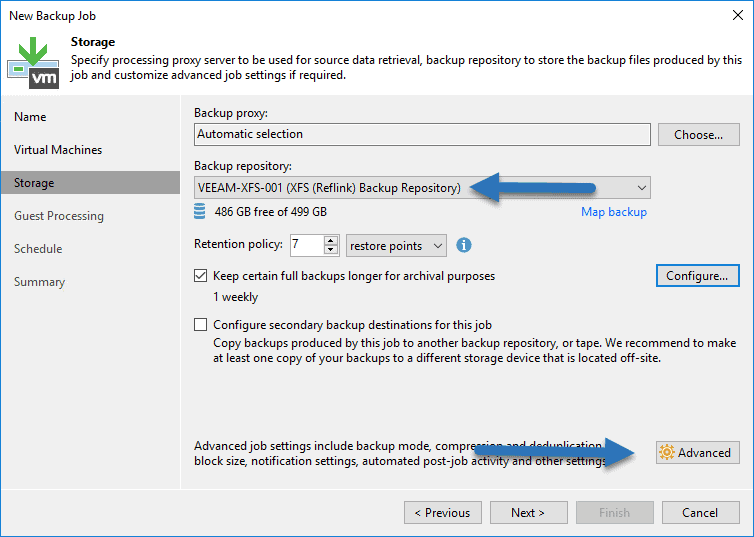

I should mention that the recommended way to use XFS (reflink) repositories with Veeam is with synthetic full backups. So in this backup job we go to the Storage tab and select the Backup Repository. In my case I keep one complete backup chain here (a full and its incrementals, plus the synthetic fulls), and for the rest of the archiving I use Backup Copy. We will click Advanced:

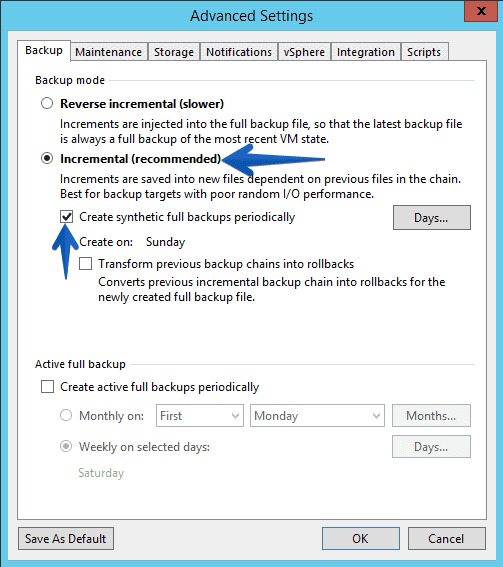

We will select the Incremental option, tick the checkbox to create synthetic full backups, and choose the day. Because it takes time and resources, it is usually better to schedule it for the weekend:

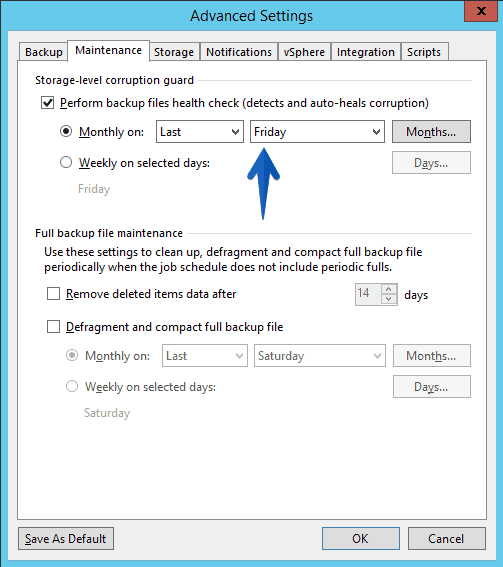

Veeam includes an option to protect backups against data corruption (storage-level corruption guard), which is especially useful on XFS (reflink) repositories. Although XFS already has its own mechanisms for this, I recommend enabling it in the backup job; it does no harm:

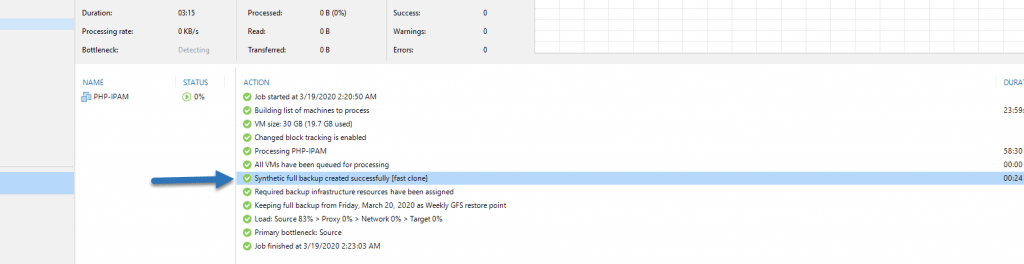

Once the jobs are running, on the day the synthetic full backup is created we can check that everything is correct and that Veeam is using XFS (reflink) fast clone technology. In my case the operation took only 17 seconds. In yours it will vary, but it will certainly perform much better than on NTFS or any other file system that does not support fast clone:

Testing the theory in our Veeam Backup Repository on XFS (Reflink)

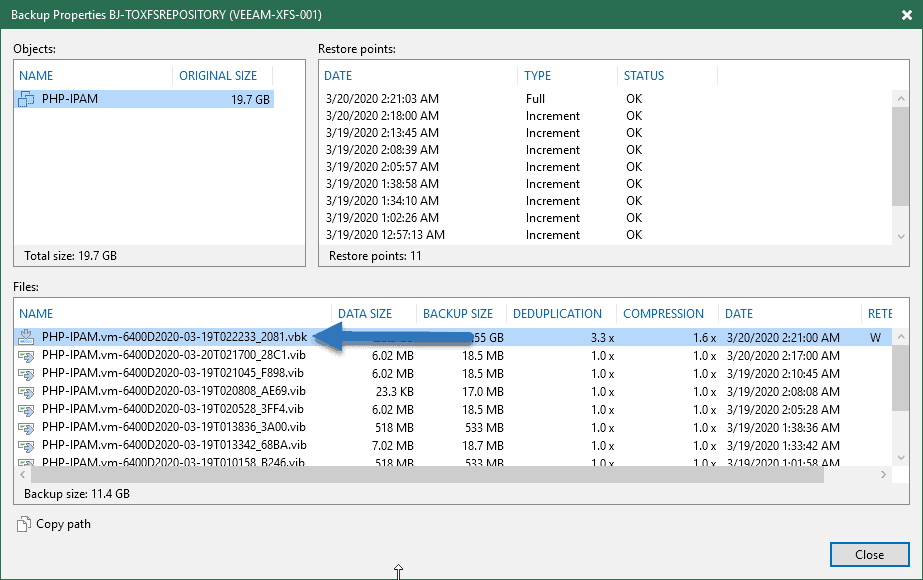

We’ve seen a lot of theory in this post. Now that we have a synthetic full backup, we can see its file name in Veeam:

Let’s check how many of the blocks in this file are shared, i.e. reused from the existing full and incremental files. Almost every extent is flagged shared; only the first four and the last two are not:

```
filefrag -v PHP-IPAM.vm-6400D2020-03-19T022233_2081.vbk
Filesystem type is: 58465342
File size of PHP-IPAM.vm-6400D2020-03-19T022233_2081.vbk is 5964161024 (1456094 blocks of 4096 bytes)
 ext: logical_offset: physical_offset: length: expected: flags:
   0: 0.. 0: 3872540.. 3872540: 1:
   1: 1.. 4360: 4161268.. 4165627: 4360: 3872541:
   2: 4361.. 4362: 4161257.. 4161258: 2: 4165628:
   3: 4363.. 4363: 4161255.. 4161255: 1: 4161259:
   4: 4364.. 12539: 2625827.. 2634002: 8176: 4161256: shared
   5: 12540.. 53494: 2634005.. 2674959: 40955: 2634003: shared
   6: 53495.. 53499: 4005853.. 4005857: 5: 2674960: shared
   7: 53500.. 53511: 2674965.. 2674976: 12: 4005858: shared
   8: 53512.. 53528: 4005858.. 4005874: 17: 2674977: shared
   9: 53529.. 53582: 2674994.. 2675047: 54: 4005875: shared
  10: 53583.. 53621: 4156485.. 4156523: 39: 2675048: shared
  11: 53622.. 96719: 2675087.. 2718184: 43098: 4156524: shared
  12: 96720.. 97751: 3725849.. 3726880: 1032: 2718185: shared
  13: 97752.. 99108: 2718871.. 2720227: 1357: 3726881: shared
  14: 99109.. 100140: 3726881.. 3727912: 1032: 2720228: shared
  15: 100141.. 102872: 2720883.. 2723614: 2732: 3727913: shared
  16: 102873.. 103025: 4156524.. 4156676: 153: 2723615: shared
  17: 103026.. 111903: 2723768.. 2732645: 8878: 4156677: shared
  18: 111904.. 112558: 2720228.. 2720882: 655: 2732646: shared
  19: 112559.. 115238: 2732646.. 2735325: 2680: 2720883: shared
  20: 115239.. 131750: 3728066.. 3744577: 16512: 2735326: shared
  21: 131751.. 137221: 2735326.. 2740796: 5471: 3744578: shared
  22: 137222.. 141349: 3744578.. 3748705: 4128: 2740797: shared
  23: 141350.. 144592: 2741147.. 2744389: 3243: 3748706: shared
  24: 144593.. 144942: 2740797.. 2741146: 350: 2744390: shared
  25: 144943.. 145374: 2744390.. 2744821: 432: 2741147: shared
  26: 145375.. 160036: 3748706.. 3763367: 14662: 2744822: shared
  27: 160037.. 164703: 2752899.. 2757565: 4667: 3763368: shared
  28: 164704.. 166767: 3763368.. 3765431: 2064: 2757566: shared
  29: 166768.. 167812: 2757566.. 2758610: 1045: 3765432: shared
  30: 167813.. 171940: 3765432.. 3769559: 4128: 2758611: shared
  31: 171941.. 176115: 2758611.. 2762785: 4175: 3769560: shared
  32: 176116.. 176614: 2751026.. 2751524: 499: 2762786: shared
  33: 176615.. 201382: 3769560.. 3794327: 24768: 2751525: shared
  34: 201383.. 202002: 2767922.. 2768541: 620: 3794328: shared
  35: 202003.. 208194: 3794328.. 3800519: 6192: 2768542: shared
  36: 208195.. 211217: 2768542.. 2771564: 3023: 3800520: shared
  37: 211218.. 217409: 3800520.. 3806711: 6192: 2771565: shared
  38: 217410.. 218665: 2771703.. 2772958: 1256: 3806712: shared
  39: 218666.. 249625: 3806712.. 3837671: 30960: 2772959: shared
  40: 249626.. 253980: 2774134.. 2778488: 4355: 3837672: shared
  41: 253981.. 256044: 3837672.. 3839735: 2064: 2778489: shared
  42: 256045.. 256769: 2778489.. 2779213: 725: 3839736: shared
  43: 256770.. 257955: 2766598.. 2767783: 1186: 2779214: shared
  44: 257956.. 261846: 2745036.. 2748926: 3891: 2767784: shared
  45: 261847.. 262028: 2762786.. 2762967: 182: 2748927: shared
  46: 262029.. 262532: 2718367.. 2718870: 504: 2762968: shared
  47: 262533.. 263384: 2750174.. 2751025: 852: 2718871: shared
  48: 263385.. 264293: 2779214.. 2780122: 909: 2751026: shared
  49: 264294.. 266357: 3839736.. 3841799: 2064: 2780123: shared
  50: 266358.. 266481: 2780123.. 2780246: 124: 3841800: shared
  51: 266482.. 268545: 3841800.. 3843863: 2064: 2780247: shared
  52: 268546.. 276699: 3864384.. 3872537: 8154: 3843864: shared
  53: 276700.. 278865: 3872545.. 3874710: 2166: 3872538: shared
  54: 278866.. 279460: 2780515.. 2781109: 595: 3874711: shared
  55: 279461.. 366148: 3874711.. 3961398: 86688: 2781110: shared
  56: 366149.. 366150: 4005875.. 4005876: 2: 3961399: shared
  57: 366151.. 368373: 2782063.. 2784285: 2223: 4005877: shared
  58: 368374.. 370437: 3961401.. 3963464: 2064: 2784286: shared
  59: 370438.. 371546: 2748927.. 2750035: 1109: 3963465: shared
  60: 371547.. 371682: 2784286.. 2784421: 136: 2750036: shared
  61: 371683.. 404706: 3963465.. 3996488: 33024: 2784422: shared
  62: 404707.. 481074: 4005877.. 4082244: 76368: 3996489: shared
  63: 481075.. 481921: 2801233.. 2802079: 847: 4082245: shared
  64: 481922.. 535585: 4082245.. 4135908: 53664: 2802080: shared
  65: 535586.. 537551: 2819759.. 2821724: 1966: 4135909: shared
  66: 537552.. 539615: 4135909.. 4137972: 2064: 2821725: shared
  67: 539616.. 540700: 2764265.. 2765349: 1085: 4137973: shared
  68: 540701.. 540882: 2821725.. 2821906: 182: 2765350: shared
  69: 540883.. 544436: 2810370.. 2813923: 3554: 2821907: shared
  70: 544437.. 545545: 2765350.. 2766458: 1109: 2813924: shared
  71: 545546.. 545690: 2821907.. 2822051: 145: 2766459: shared
  72: 545691.. 548072: 2813924.. 2816305: 2382: 2822052: shared
  73: 548073.. 664389: 2822052.. 2938368: 116317: 2816306: shared
  74: 664390.. 664413: 3858312.. 3858335: 24: 2938369: shared
  75: 664414.. 714847: 2938393.. 2988826: 50434: 3858336: shared
  76: 714848.. 715002: 4156677.. 4156831: 155: 2988827: shared
  77: 715003.. 717220: 2988982.. 2991199: 2218: 4156832: shared
  78: 717221.. 717379: 4137976.. 4138134: 159: 2991200: shared
  79: 717380.. 721183: 2991358.. 2995161: 3804: 4138135: shared
  80: 721184.. 725310: 2806061.. 2810187: 4127: 2995162: shared
  81: 725311.. 727374: 2817695.. 2819758: 2064: 2810188: shared
  82: 727375.. 738265: 2995162.. 3006052: 10891: 2819759: shared
  83: 738266.. 739639: 2751525.. 2752898: 1374: 3006053: shared
  84: 739640.. 740936: 2762968.. 2764264: 1297: 2752899: shared
  85: 740937.. 744716: 2793053.. 2796832: 3780: 2764265: shared
  86: 744717.. 749526: 3006053.. 3010862: 4810: 2796833: shared
  87: 749527.. 749666: 2784550.. 2784689: 140: 3010863: shared
  88: 749667.. 806536: 3010863.. 3067732: 56870: 2784690: shared
  89: 806537.. 806718: 2718185.. 2718366: 182: 3067733: shared
  90: 806719.. 835604: 3067733.. 3096618: 28886: 2718367: shared
  91: 835605.. 835786: 2781745.. 2781926: 182: 3096619: shared
  92: 835787.. 873236: 3096619.. 3134068: 37450: 2781927: shared
  93: 873237.. 873265: 3858491.. 3858519: 29: 3134069: shared
  94: 873266.. 873290: 3134098.. 3134122: 25: 3858520: shared
  95: 873291.. 873313: 3858520.. 3858542: 23: 3134123: shared
  96: 873314.. 936107: 3134146.. 3196939: 62794: 3858543: shared
  97: 936108.. 936246: 2766459.. 2766597: 139: 3196940: shared
  98: 936247.. 955604: 3196940.. 3216297: 19358: 2766598: shared
  99: 955605.. 955701: 3858543.. 3858639: 97: 3216298: shared
 100: 955702.. 1049838: 3216395.. 3310531: 94137: 3858640: shared
 101: 1049839.. 1049975: 2785104.. 2785240: 137: 3310532: shared
 102: 1049976.. 1067396: 3310532.. 3327952: 17421: 2785241: shared
 103: 1067397.. 1067534: 2750036.. 2750173: 138: 3327953: shared
 104: 1067535.. 1076470: 3327953.. 3336888: 8936: 2750174: shared
 105: 1076471.. 1076610: 2773855.. 2773994: 140: 3336889: shared
 106: 1076611.. 1078559: 3336889.. 3338837: 1949: 2773995: shared
 107: 1078560.. 1078579: 3858640.. 3858659: 20: 3338838: shared
 108: 1078580.. 1078815: 3338858.. 3339093: 236: 3858660: shared
 109: 1078816.. 1078840: 3858660.. 3858684: 25: 3339094: shared
 110: 1078841.. 1078864: 4001412.. 4001435: 24: 3858685: shared
 111: 1078865.. 1078878: 4156832.. 4156845: 14: 4001436: shared
 112: 1078879.. 1093138: 3339163.. 3353422: 14260: 4156846: shared
 113: 1093139.. 1093165: 3858709.. 3858735: 27: 3353423: shared
 114: 1093166.. 1120484: 3353450.. 3380768: 27319: 3858736: shared
 115: 1120485.. 1120509: 4156846.. 4156870: 25: 3380769: shared
 116: 1120510.. 1120537: 3380794.. 3380821: 28: 4156871: shared
 117: 1120538.. 1120568: 3858761.. 3858791: 31: 3380822: shared
 118: 1120569.. 1120862: 3380853.. 3381146: 294: 3858792: shared
 119: 1120863.. 1120936: 3858792.. 3858865: 74: 3381147: shared
 120: 1120937.. 1120977: 3381221.. 3381261: 41: 3858866: shared
 121: 1120978.. 1121065: 3858866.. 3858953: 88: 3381262: shared
 122: 1121066.. 1308961: 3381350.. 3569245: 187896: 3858954: shared
 123: 1308962.. 1308983: 3858954.. 3858975: 22: 3569246: shared
 124: 1308984.. 1431939: 3569268.. 3692223: 122956: 3858976: shared
 125: 1431940.. 1431974: 3858976.. 3859010: 35: 3692224: shared
 126: 1431975.. 1441825: 3692259.. 3702109: 9851: 3859011: shared
 127: 1441826.. 1441859: 3859011.. 3859044: 34: 3702110: shared
 128: 1441860.. 1451809: 3702144.. 3712093: 9950: 3859045: shared
 129: 1451810.. 1452753: 3859045.. 3859988: 944: 3712094: shared
 130: 1452754.. 1456089: 3713308.. 3716643: 3336: 3859989: shared
 131: 1456090.. 1456090: 4161259.. 4161259: 1: 3716644:
 132: 1456091.. 1456093: 4165628.. 4165630: 3: 4161260: last,eof
PHP-IPAM.vm-6400D2020-03-19T022233_2081.vbk: 133 extents found
```
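Rather than reading 133 extents by eye, a short awk script can add up the length column (in file system blocks, 4096 bytes on this volume) and report how much of the file sits in extents flagged shared. The function name `filefrag_shared_summary` is hypothetical, and the sketch relies on the colon-separated layout of filefrag -v shown above; it prints the shared blocks, the total blocks and the shared percentage.

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `filefrag -v FILE` output on stdin and prints
# "SHARED_BLOCKS TOTAL_BLOCKS SHARED_PERCENT", where blocks are file system
# blocks (4096 bytes on this volume) taken from the length column.
filefrag_shared_summary() {
  awk -F':' '
    # Extent rows start with the extent number; field 4 is the length
    $1 ~ /^[ \t]*[0-9]+$/ {
      len = $4
      gsub(/[ \t]/, "", len)
      total += len
      # Flags (if any) sit after the last colon, e.g. "shared" or "last,shared,eof"
      if ($NF ~ /shared/) shared += len
    }
    END {
      if (total == 0) exit 1
      printf "%d %d %.1f\n", shared, total, 100 * shared / total
    }
  '
}

# Usage:
# filefrag -v PHP-IPAM.vm-6400D2020-03-19T022233_2081.vbk | filefrag_shared_summary
```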

Furthermore, let’s check again how much disk space we are using:

```
df -h .
Filesystem      Size  Used Avail Use% Mounted on
/dev/sdb        500G  9.4G  491G   2% /backups
```

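If we compare that with what our files appear to weigh, we can see the significant disk savings: ls reports a total of 12G, while df shows only 9.4G in use on the whole volume, and about 3.6G of that was already in use right after formatting: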

```
ls -hsl
total 12G
 80K -rw-rw-rw- 1 root  79K Mar 19 02:23 BJ-TOXFSREPOSITORY.vbm
4.2G -rw-r--r--- 1 root 4.2G Mar 19 00:11 PHP-IPAM.vm-6400D2020-03-19T000537_1279.vbk
 19M -rw-r--r--- 1 root  19M Mar 19 00:15 PHP-IPAM.vm-6400D2020-03-19T001355_0B05.vib
542M -rw-r--r--- 1 root 542M Mar 19 00:58 PHP-IPAM.vm-6400D2020-03-19T005646_0FE7.vib
534M -rw-r--r--- 1 root 534M Mar 19 01:03 PHP-IPAM.vm-6400D2020-03-19T010158_B246.vib
 19M -rw-r--r--- 1 root  19M Mar 19 01:34 PHP-IPAM.vm-6400D2020-03-19T013342_68BA.vib
534M -rw-r--r--- 1 root 534M Mar 19 01:39 PHP-IPAM.vm-6400D2020-03-19T013836_3A00.vib
 19M -rw-r--r--- 1 root  19M Mar 19 02:06 PHP-IPAM.vm-6400D2020-03-19T020528_3FF4.vib
 18M -rw-r--r--- 1 root  18M Mar 19 02:09 PHP-IPAM.vm-6400D2020-03-19T020808_AE69.vib
 19M -rw-r--r--- 1 root  19M Mar 19 02:15 PHP-IPAM.vm-6400D2020-03-19T021045_F898.vib
5.6G -rw-r--r--- 1 root 5.6G Mar 19 02:22 PHP-IPAM.vm-6400D2020-03-19T022233_2081.vbk
 19M -rw-r--r--- 1 root  19M Mar 19 02:20 PHP-IPAM.vm-6400D2020-03-20T021700_28C1.vib
```
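To put these two numbers side by side for any folder without doing the arithmetic by hand, a hypothetical `space_report` function could print what du adds up across the files next to what df says the volume really uses. On a reflink volume the first figure can be larger than the second, because du counts shared extents once for every file that references them. Keep in mind that the df figure covers the whole volume, including the space already in use right after formatting. This is a sketch that assumes GNU coreutils (for `du -B1` and `df --output`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: for directory $1, print the space its files add up
# to according to du, next to the space df reports as used on the volume.
space_report() {
  local dir="${1:-.}" allocated used
  # du sums the allocated blocks of each file; reflinked (shared) extents
  # are counted again for every file that references them
  allocated="$(du -s -B1 "$dir" | awk '{ print $1 }')"
  # df reports what the whole volume actually has in use
  used="$(df -B1 --output=used "$dir" | tail -n 1 | tr -d ' ')"
  printf 'allocated_by_files=%s volume_used=%s\n' "$allocated" "$used"
}

# Usage:
# space_report /backups/BJ-TOXFSREPOSITORY
```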

That’s all, friends. Remember that you can download Veeam Backup & Replication from the following link:

Must-read Links

I will leave a few really interesting links here to bear in mind:

Hi Jorge,

Cool stuff here, but one thing I cannot find on the Veeam site is the recommended sizing for Linux XFS with reflink. Can you help sort this out? For ReFS, the recommendation is 1GB of RAM per 1TB, but nothing is mentioned for XFS with reflink.

Regards

Pedro

Hello Pedro,

There is not much information on the Internet about it. I would like to think it tends to consume somewhat less RAM than ReFS, as it uses 4K blocks, but I would stick to 1GB of RAM per 1TB just in case, and monitor it with Grafana or similar.

Let me know if you find any information; I couldn’t, and I was looking for days.

Hi Jorge, could the above be applied to copying entire XFS repositories that Cloud Connect writes to?

i.e. create a Backup Copy of the Linux server that has the XFS mount, and set the destination to a similar Linux server at a remote site with an XFS mount of the same size?

Hello,

Well, this is pretty basic: as long as you keep them on different volumes and folders, yes.