Greetings everyone, this blog post is a bit different: it is meant to spread awareness of a potential issue that might affect your environment if you, or your customers, upgrade to the latest ESXi 7.0 Update 3 (Build 18644231, to be more precise).

Important News: VMware has pulled ESXi 7.0 Update 3, Update 3a, and Update 3b.

What’s New

- ESXi 7.0 Update 3a delivers a fix for large UNMAP requests on thin-provisioned VMDKs that might cause ESXi hosts to fail with a purple diagnostic screen. For more information, see the Resolved Issues section.

- If your source system contains the ESXi 7.0 Update 2 release (build number 17630552) or later builds with Intel drivers, before upgrading to ESXi 7.0 Update 3a, check VMware knowledge base article 85982 for workarounds related to vSphere Lifecycle Manager. For more information, see the Known Issues section.

Update today if you are running ESXi 7.0 U3.

Everything you need to know (what is happening, a debrief of the official KB, etc.) is also available in video.

What is happening?

In their official KB 86100, VMware states the following: “Thin provisioned virtual disks (VMDKs) residing on VMFS6 datastores, may cause multiple hosts in an HA cluster to fail with a purple diagnostic screen.”

Additionally, VMware has narrowed the issue down to what they call “aged datastores”, without specifying what they mean by that:

In ESXi 7.0.3 release VMFS added a change to have uniform UNMAP granularities across VMFS & SE Sparse snapshot. As a part of this change maximum UNMAP granularity reported by VMFS was adjusted to 2GB. A TRIM/UNMAP request of 2GB issued from Guest OS can in rare situations result in a VMFS metadata transaction requiring lock acquisition of a large number of resource clusters (greater than 50 resources) which is not handled correctly, resulting in an ESXi PSOD. VMFS metadata transaction requiring lock actions on greater than 50 resource clusters is not common and can happen on aged datastores. This concern only impacts Thin Provisioned VMDKs; Thick and Eager Zero Thick VMDKs are not impacted.
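To make that explanation a bit more concrete, here is a toy Python model of why “aged” (fragmented) datastores matter. It is my own illustration, not VMware code: the 1 MB block size and the `BLOCKS_PER_CLUSTER` value are assumptions picked for the example, not the real VMFS6 on-disk layout. Only the 2 GB UNMAP size and the 50-cluster threshold come from the KB. The idea is that the same 2 GB UNMAP touches few resource clusters when a thin VMDK's blocks were allocated contiguously, but potentially many more when they are scattered across the datastore.

```python
# Toy model, NOT real VMFS code: how many resource clusters can one UNMAP touch?
# BLOCK_MB and BLOCKS_PER_CLUSTER are assumed, illustrative values.

BLOCK_MB = 1                # assumed file block size in MB
BLOCKS_PER_CLUSTER = 64     # hypothetical blocks per resource cluster
LOCK_THRESHOLD = 50         # "greater than 50 resource clusters" (KB 86100)
UNMAP_MB = 2048             # 2 GB maximum UNMAP granularity in ESXi 7.0.3


def clusters_touched(physical_blocks):
    """Count the distinct resource clusters that back the given blocks."""
    return len({block // BLOCKS_PER_CLUSTER for block in physical_blocks})


def contiguous_layout(n_blocks, start=0):
    """Fresh datastore: the VMDK's blocks were allocated back to back."""
    return list(range(start, start + n_blocks))


def fragmented_layout(n_blocks, stride):
    """Aged datastore: consecutive VMDK blocks land `stride` blocks apart."""
    return [i * stride for i in range(n_blocks)]


if __name__ == "__main__":
    n = UNMAP_MB // BLOCK_MB  # 2048 blocks covered by a single 2 GB UNMAP
    layouts = {
        "contiguous": contiguous_layout(n),
        "fragmented": fragmented_layout(n, stride=BLOCKS_PER_CLUSTER),
    }
    for name, blocks in layouts.items():
        touched = clusters_touched(blocks)
        print(f"{name}: {touched} clusters, over threshold: {touched > LOCK_THRESHOLD}")
```

With these made-up numbers, the contiguous layout touches 32 clusters (below the threshold) while the fragmented one touches 2048. The real figures depend on VMFS internals that the KB does not spell out.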

Thin-provisioned VMDKs and “aged datastores” (which I assume means datastores carried over from previous vSphere versions, VMFS5 or early VMFS6) are a really common use case out there. I have not seen many environments with all their VMs in thick mode, for obvious reasons: every business tries to squeeze as many VMs as possible, in moderation, onto the same hardware. That is the very foundation of virtualization, right? Abstract the underlying hardware and present it virtually to do more with the same hardware.
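To put some numbers on that, here is a back-of-the-envelope Python sketch of what thin provisioning saves and whether the same VMs would still fit if they were all converted to thick. The VM names and sizes are hypothetical, and the model deliberately ignores swap files, snapshots and VMFS metadata overhead.

```python
# Back-of-the-envelope capacity math for thin vs. thick VMDKs.
# All names and sizes below are hypothetical examples.
from dataclasses import dataclass


@dataclass
class Vmdk:
    name: str
    provisioned_gb: float  # size the guest OS sees
    used_gb: float         # space actually consumed by the thin VMDK


def thin_savings_gb(disks):
    """Space thin provisioning avoids allocating (provisioned minus used)."""
    return sum(d.provisioned_gb - d.used_gb for d in disks)


def overcommit_ratio(disks, datastore_gb):
    """Total provisioned capacity divided by physical datastore capacity."""
    return sum(d.provisioned_gb for d in disks) / datastore_gb


def fits_as_thick(disks, datastore_gb):
    """Thick VMDKs reserve their full provisioned size up front."""
    return sum(d.provisioned_gb for d in disks) <= datastore_gb


if __name__ == "__main__":
    disks = [
        Vmdk("app01", 500, 300),
        Vmdk("db01", 1000, 650),
        Vmdk("web01", 200, 90),
    ]
    datastore_gb = 1500
    print(f"Thin savings: {thin_savings_gb(disks):.0f} GB")
    print(f"Overcommit ratio: {overcommit_ratio(disks, datastore_gb):.2f}")
    print(f"Fits if converted to thick: {fits_as_thick(disks, datastore_gb)}")
```

With these hypothetical figures, thin provisioning saves 660 GB and the datastore is overcommitted by about 13%, so the same three VMs would not fit as thick disks.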

More information can be found on the VMware Communities, where other customers are sharing useful details: https://communities.vmware.com/t5/ESXi-Discussions/ESXi-7-0-3-PSOD/td-p/2870776

This specific issue can only affect you if you upgrade to ESXi 7.0.3 (ESXi 7.0 Update 3, Build 18644231).
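If you manage many hosts, a quick way to spot the affected build is to check the version string on each one. Below is a minimal Python sketch that assumes you have already collected the output of `vmware -v` from each host (for example over SSH), in the format `VMware ESXi 7.0.3 build-18644231`. The host names are hypothetical, and only the build mentioned in this post is flagged, so always double-check against VMware's KB.

```python
# Flag hosts running the build discussed in this post.
# Assumption: input lines look like `vmware -v` output,
# e.g. "VMware ESXi 7.0.3 build-18644231".
import re

AFFECTED_BUILDS = {18644231}  # ESXi 7.0 Update 3, per this post
VERSION_RE = re.compile(r"ESXi\s+(\d+\.\d+\.\d+)\s+build-(\d+)")


def parse_version(line):
    """Return (version, build) from a `vmware -v` style line, or None."""
    match = VERSION_RE.search(line)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def is_affected(line):
    """True if the line reports one of the builds in AFFECTED_BUILDS."""
    parsed = parse_version(line)
    return parsed is not None and parsed[1] in AFFECTED_BUILDS


if __name__ == "__main__":
    # Hypothetical inventory: host name -> `vmware -v` output.
    hosts = {
        "esx01": "VMware ESXi 7.0.3 build-18644231",
        "esx02": "VMware ESXi 7.0.2 build-17630552",
    }
    for host, line in hosts.items():
        print(host, "AFFECTED" if is_affected(line) else "ok")
```

Collecting the strings (SSH, PowerCLI, your inventory tool) is left out on purpose, as it depends on your environment.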

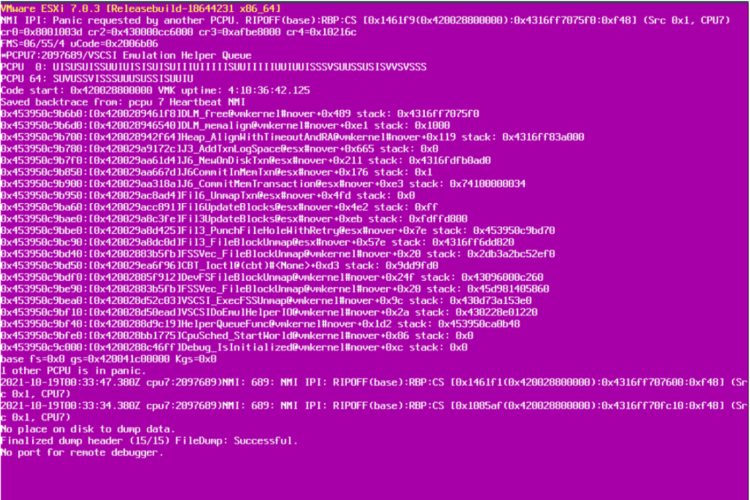

What does it look like?

The issue triggers a PSOD (Purple Screen of Death), which is never pleasant to see, and forces a reboot of the ESXi host. It does not stop there: if the host is part of a cluster with DRS or HA, the thin-provisioned VM is restarted on another host, triggers the same issue there, and so on in a loop until all the ESXi hosts are down. From what has been reported, the issue most likely appears when thin-provisioned VMs are booted up (though apparently not every time).

OMG, what can I do?

First, breathe. I know that if you have ended up on the official KB, or here, you might be panicking. There is not much you can do, but there are still three options for now:

- Downgrade your ESXi to 7.0.2, or to the build you were running before the upgrade. There is a good KB to follow if this is your preferred path: https://kb.vmware.com/s/article/1033604

- Convert all thin-provisioned VMs to thick-provisioned. This is where I imagine you asking the business for another array, to make up for the 30-40% of headroom that thin provisioning has given you all these years.

- Disable TRIM/UNMAP in the Guest OS. Note: please consult your OS documentation on how to adjust TRIM/UNMAP features, for a complete understanding of the OS-specific configuration needs; functions and capabilities may vary across distributions and versions. Examples: https://ikb.vmware.com/s/article/2150591, https://www.suse.com/support/kb/doc/?id=000019447
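For Linux guests, one common way TRIM gets issued is the `discard` mount option in `/etc/fstab` (online discard). Here is a minimal Python sketch, assuming a standard whitespace-separated fstab, that finds and drops that option from the file's text so you can review the result before writing it back. It does not cover periodic TRIM (for example the `fstrim.timer` systemd unit on many distributions) or Windows guests, so follow the KBs above for the full picture. The change only takes effect on the next mount, and a guest reboot is the simplest way to get there.

```python
# Minimal sketch for Linux guests: drop the `discard` mount option from
# fstab-formatted text. Works on a string so the result can be reviewed
# before anything is written back to /etc/fstab.


def is_discard_option(option):
    """True for `discard` and its `discard=<mode>` form (e.g. btrfs)."""
    return option == "discard" or option.startswith("discard=")


def strip_discard(fstab_text):
    """Return (new_text, mount_points_changed). `nodiscard` is left alone."""
    out_lines = []
    changed = []
    for line in fstab_text.splitlines():
        stripped = line.strip()
        fields = stripped.split()
        # Keep blank lines, comments and malformed entries exactly as they are.
        if not stripped or stripped.startswith("#") or len(fields) < 4:
            out_lines.append(line)
            continue
        options = fields[3].split(",")
        kept = [opt for opt in options if not is_discard_option(opt)]
        if len(kept) == len(options):
            out_lines.append(line)
            continue
        # Rebuild the entry (tab-separated); "defaults" if discard was alone.
        fields[3] = ",".join(kept) or "defaults"
        changed.append(fields[1])
        out_lines.append("\t".join(fields))
    return "\n".join(out_lines) + "\n", changed


if __name__ == "__main__":
    sample = (
        "# <device> <mount> <type> <options> <dump> <pass>\n"
        "UUID=1111 / ext4 defaults,discard 0 1\n"
        "UUID=2222 /data xfs defaults 0 2\n"
    )
    new_text, changed = strip_discard(sample)
    print("Changed mount points:", changed)
    print(new_text, end="")
```

Rewritten entries are tab-separated, so their original column alignment is not preserved; untouched lines are kept exactly as they were.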

As a fourth option, I am sure VMware will soon release a hotfix or an ESXi patch to address this issue. Until then, stay tuned.

I hope this blog post is useful, and that you read it before going ahead with the upgrade.

7.0.3 is no longer available… 🙂

Imagine that 🙂 I totally understand: there are just too many bugs, SD card errors (not warnings, just failing SD cards), these thin-provisioned VMs, and tons of others. I will edit the disclaimer.