Greetings friends! In previous articles, I showed you how to install Ollama and download your first models. A few weeks ago, DeepSeek took the Internet by surprise with DeepSeek-R1, its impressive reasoning model. You can of course give it a try on the official website or by downloading the mobile application. DeepSeek-R1 marks a significant step forward in how reinforcement learning (RL) can shape large language models, and the results are really exciting. Let’s break it down in this article.

What is DeepSeek-R1-Zero

Before we jump into the great stuff, let’s start with a brief overview of what DeepSeek-R1-Zero is. It is an AI model trained using large-scale reinforcement learning (RL) alone, with no supervised fine-tuning (SFT) as an initial step. The results are outstanding: the model exhibits strong reasoning skills and naturally develops powerful capabilities like self-verification, reflection, and complex chain-of-thought (CoT) generation. Where before we needed to craft this chain of thought manually, with DeepSeek-R1 we can observe how the model reaches its conclusions and decides what to do next by itself!

There is a small caveat: DeepSeek-R1-Zero struggles with endless repetition, poor readability, and language mixing. So the guys at DeepSeek achieved impressive reasoning, which was good as a first step.

What is DeepSeek-R1

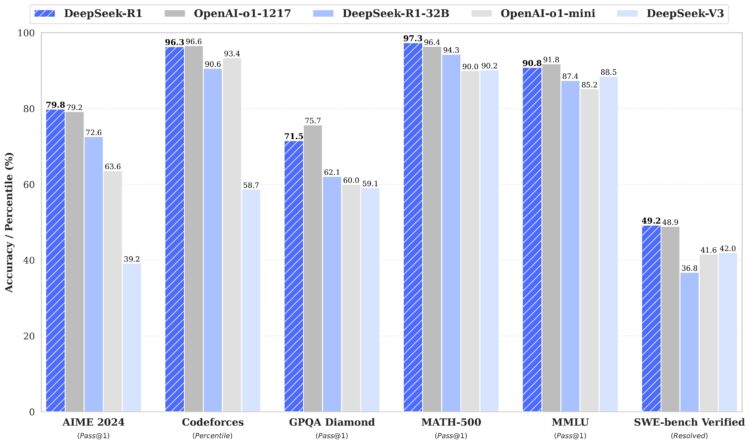

To address the limitations mentioned above and further refine the model’s reasoning abilities, these guys introduced DeepSeek-R1. This model incorporates cold-start data before RL, helping to mitigate the readability issues while maintaining the strong reasoning patterns discovered in DeepSeek-R1-Zero. That is what we now have as GA with DeepSeek-R1: one of the best AI models, delivering performance on par with OpenAI-o1 across math, coding, and reasoning benchmarks.

Open-Source? Secure?

If you have been following this blog, you know that if I can choose between open-source and proprietary, I will choose open-source. Not just that: since Meta released (leaked) Llama, everything changed in the AI space, where until then only a few controlled the whole ecosystem.

Recently there have been countless posts about how DeepSeek uses your data and how much data it collects. I mean, if you know just a little bit about the Internet, you should know by now that phones and smart speakers listen to conversations, that Google scans your inbox and searches to offer you tailored ads, etc. OpenAI does exactly the same as DeepSeek, but instead of sending the data to China, it sends it to the United States. Look, I understand the concerns, especially if the questions you are going to ask or the material you are going to pass is considered sensitive, if you are under some regulations, or, of course, if you would be sending intellectual property, let’s say your current employer’s private information.

That is why DeepSeek did it right, as they have open-sourced both DeepSeek-R1-Zero and DeepSeek-R1, along with six distilled models based on Llama and Qwen.

So, my personal recommendation if you want to keep your data secure: run open-source, private, local models as much as you can. Let’s take a look at the distilled models that DeepSeek has released. In case you are asking yourself what a distilled model is: these distilled models are open-source models fine-tuned using samples generated by DeepSeek-R1.

| Model | Base Model |
|---|---|
| DeepSeek-R1-Distill-Qwen-1.5B | Qwen2.5-Math-1.5B |
| DeepSeek-R1-Distill-Qwen-7B | Qwen2.5-Math-7B |
| DeepSeek-R1-Distill-Llama-8B | Llama-3.1-8B |
| DeepSeek-R1-Distill-Qwen-14B | Qwen2.5-14B |
| DeepSeek-R1-Distill-Qwen-32B | Qwen2.5-32B |
| DeepSeek-R1-Distill-Llama-70B | Llama-3.3-70B-Instruct |
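
The parameter counts in the model names give a rough feel for how much memory each model needs. The sketch below is only back-of-the-envelope arithmetic: it assumes the weights are stored at about 4 bits each (an assumption about the quantization of the build you download, not something stated here), and it ignores the extra memory used by the context cache and the operating system. The function name `estimate_weights_gb` is made up for this example.

```python
# Nominal parameter counts (in billions), taken from the model names above.
DISTILLED_MODELS = {
    "DeepSeek-R1-Distill-Qwen-1.5B": 1.5,
    "DeepSeek-R1-Distill-Qwen-7B": 7,
    "DeepSeek-R1-Distill-Llama-8B": 8,
    "DeepSeek-R1-Distill-Qwen-14B": 14,
    "DeepSeek-R1-Distill-Qwen-32B": 32,
    "DeepSeek-R1-Distill-Llama-70B": 70,
}


def estimate_weights_gb(params_billion: float, bits_per_weight: float = 4.0) -> float:
    """Approximate size of the weights alone, in decimal gigabytes.

    Assumption: every weight takes `bits_per_weight` bits. Real quantized
    files mix precisions, so treat the result as a lower bound.
    """
    # 1 billion parameters at 8 bits each is roughly 1 GB.
    return params_billion * bits_per_weight / 8


if __name__ == "__main__":
    for name, size in DISTILLED_MODELS.items():
        print(f"{name}: about {estimate_weights_gb(size):.1f} GB of weights at 4 bits")
```

Whatever number comes out, leave headroom on top of it: the runtime and the OS need their share of RAM too, so a model whose weights barely fit on paper may still be too much for a small board.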

Right, let’s finally unlock the full power of our NVIDIA Jetson Orin Nano and run DeepSeek.

How to download and run DeepSeek-R1 securely and privately using Ollama

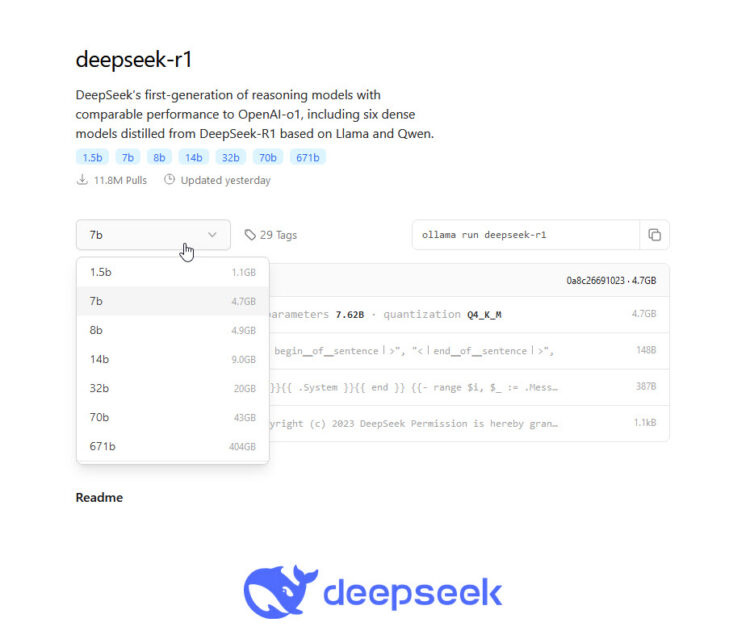

First, let’s go to the Ollama website to see what models we can download and run for our Jetson Nano:

There are a few models. I have tried the 1.5B and 7B ones; when I try running the 8B, it is too much for the unit, as the OS is using some RAM as well. But of course, if you have enough RAM/VRAM, I would totally recommend 32B onwards. Once we know the model we want, let’s go to our NVIDIA Jetson Nano and run the following:

ollama run deepseek-r1:1.5b

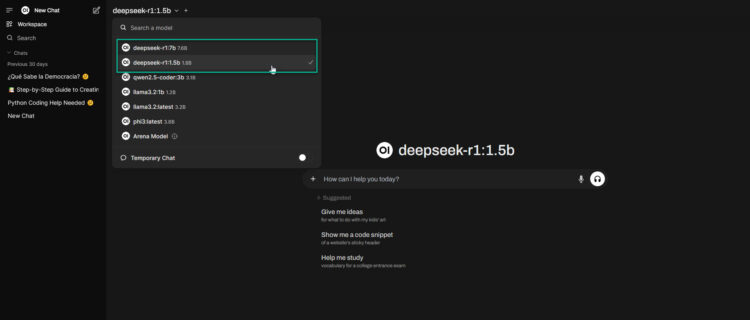

We will see how the model downloads, and that’s it! I mean, this is always so simple that it blows my mind. Let’s now log in to our Open WebUI, which I also covered in a previous blog post. We can now select the model, or models, that we want:

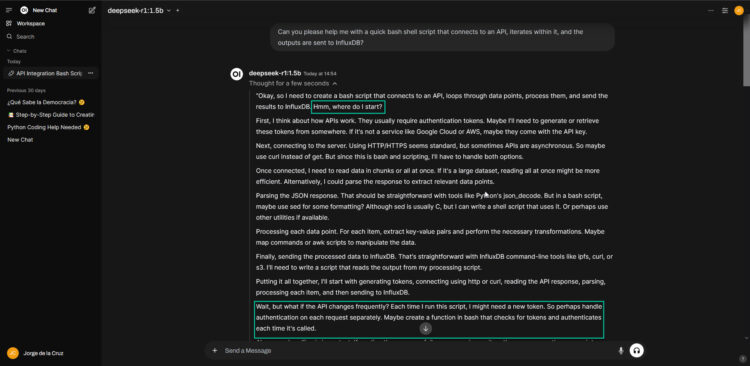
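
If you prefer a script over the web interface, the same local Ollama server can be queried over HTTP. This is a minimal sketch, assuming Ollama is listening on its default address `http://localhost:11434` and that the `deepseek-r1:1.5b` model from the command above is already downloaded. The helper names `build_generate_request` and `ask_ollama` are made up for this example; adjust the host if your Jetson is reached over the network.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, host: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{host}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )


def ask_ollama(model: str, prompt: str, host: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt, host)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        # With "stream": False the server answers with a single JSON object
        # whose "response" field holds the generated text.
        return json.loads(reply.read())["response"]
```

Calling `ask_ollama("deepseek-r1:1.5b", "Write a Python function that reverses a string.")` should return the model's full output as one string. The generous timeout is deliberate, since small boards can take a while to produce a long reasoning trace.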

Let’s give it a quick try to see if it can help us code something simple. We will start seeing the reasoning process, with a few little nuggets that read very cool:

And let’s check the result. It might not be perfect or very complete, but the thought process is what we are evaluating. The smaller models especially can help when you give them one clear, simple task to solve at a time. Try the 7B for much better results.
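
Since the thought process is what we are evaluating, it can be handy to separate it from the final answer when you work with the raw text. The sketch below assumes the model wraps its reasoning in `<think>` and `</think>` tags in the raw output, which is how DeepSeek-R1 responses commonly look; check your own output first, as some front ends already strip or collapse this part. The function name `split_reasoning` is made up for this example.

```python
import re

# Assumption: the reasoning is wrapped in a single <think>...</think> block.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(text: str) -> tuple[str, str]:
    """Return (reasoning, answer) from a raw model response.

    If no <think> block is found, the reasoning is empty and the
    whole text is treated as the answer.
    """
    match = THINK_BLOCK.search(text)
    if match is None:
        return "", text.strip()
    reasoning = match.group(1).strip()
    # Everything outside the <think> block is the answer.
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer


if __name__ == "__main__":
    sample = "<think>\nThe user wants 2 + 2.\n</think>\n\nThe result is 4."
    reasoning, answer = split_reasoning(sample)
    print("Reasoning:", reasoning)
    print("Answer:", answer)
```

This way you can log the reasoning for review and only show or store the final answer.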

That’s it for today. I hope you can give this a try, whether it is DeepSeek or any other model. As said previously, my recommendation is to always run it locally whenever possible.