Five years ago, on the Spanish blog site, my colleague Oscar Mas wrote a great blog series about Kubernetes for all our Spanish readers.

Those were extremely early days. Although the series had good traffic and helped a lot of people cut their teeth in the Kubernetes world, it was probably too early to even discuss how to deploy Kubernetes Clusters on vanilla hardware. This brings us to this blog entry: a new, up-to-date way to configure your own two-node Kubernetes Cluster.

System Requirements

The system requirements will vary depending on how you plan to use the Kubernetes Cluster. To start with, I would give each server the following resources as a minimum:

- 4 vCPU

- 12 GB RAM

- 20 GB disk for the OS

- VMXNET3 and PVSCSI if using VMware

- Ubuntu 20.04 LTS
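If you want a quick way to confirm each VM meets these minimums before going any further, here is a small bash sketch. The function name meets_minimum is my own, the thresholds are simply the ones listed above, and it allows about 10% slack on memory because the kernel reserves some RAM, so a "12 GB" VM reports a little less than 12 GiB in /proc/meminfo.

```bash
#!/usr/bin/env bash
# meets_minimum CPUS MEM_KB MIN_CPUS MIN_GB
# CPUS and MEM_KB describe the machine (MEM_KB as reported by MemTotal in
# /proc/meminfo). Returns 0 when both minimums are met, 1 otherwise, and
# prints on stderr what falls short.
meets_minimum() {
  local cpus=$1 mem_kb=$2 min_cpus=$3 min_gb=$4 rc=0
  # Accept 90% of the nominal size: MemTotal excludes kernel-reserved memory.
  local min_kb=$(( min_gb * 1024 * 1024 * 9 / 10 ))
  if (( cpus < min_cpus )); then
    echo "CPU: found $cpus, want at least $min_cpus" >&2
    rc=1
  fi
  if (( mem_kb < min_kb )); then
    echo "RAM: found ${mem_kb} KiB, want at least ${min_kb} KiB" >&2
    rc=1
  fi
  return "$rc"
}

# Example, on each server:
#   meets_minimum "$(nproc)" "$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)" 4 12 && echo "OK"
```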

We will also need the basics: sudo or root privileges, apt, and of course a command line/terminal window of your choice. It is also better to configure a static IP address on both servers.

Best Practices for the Operating System

There are a few best practices to take into consideration for this small two-node cluster. First of all, disable swap on both servers, as by default the kubelet will not start while swap is enabled:

sudo swapoff -a
sudo rm /swap.img
sudo sed -i.bak '/swap\.img/d' /etc/fstab

If the sed command does not remove the swap.img entry from fstab, just edit the file and delete the line /swap.img none swap sw 0 0 by hand.
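The sed line above only removes the default /swap.img entry. A slightly more general sketch, an assumption on my part and not needed on a default Ubuntu 20.04 install, comments out every active swap entry instead of deleting it, so it is easy to revert. The function name is hypothetical, and it takes the fstab path as an argument so you can try it on a copy first.

```bash
#!/usr/bin/env bash
# disable_swap_entries FSTAB
# Comments out every non-comment line whose third field (filesystem type)
# is "swap". The untouched original is kept as FSTAB.bak.
disable_swap_entries() {
  local fstab=$1
  cp -- "$fstab" "$fstab.bak" || return 1
  # Read from the backup, write the edited version over the original file.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Example: try it on a copy, then inspect the difference.
#   cp /etc/fstab /tmp/fstab && disable_swap_entries /tmp/fstab && diff /tmp/fstab.bak /tmp/fstab
```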

Then, on the control plane server, set up a proper hostname:

sudo hostnamectl set-hostname cp-node

And do the same on the worker node:

sudo hostnamectl set-hostname worker-node

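Now, on both servers, add the proper /etc/hosts entries, as we are doing this without a DNS server. We pipe through sudo tee -a because a plain >> redirection is performed by your unprivileged shell and would fail:

echo "192.168.xx.xx cp-node.example.com cp-node" | sudo tee -a /etc/hosts
echo "192.168.xx.xx worker-node.example.com worker-node" | sudo tee -a /etc/hosts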
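The echo lines above append unconditionally, so running them twice leaves duplicate entries. A small idempotent sketch (add_host_entry is a hypothetical helper) only appends a line when the short hostname is not already mapped. It takes the file path as its first argument so you can test it on a copy before running it as root against /etc/hosts.

```bash
#!/usr/bin/env bash
# add_host_entry FILE IP FQDN SHORT_NAME
# Appends "IP FQDN SHORT_NAME" to FILE unless a non-comment line already
# lists SHORT_NAME as one of its hostnames.
add_host_entry() {
  local file=$1 ip=$2 fqdn=$3 name=$4
  # Hostnames start at field 2; field 1 is the IP address.
  if awk -v n="$name" '
       !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
       END { exit !found }' "$file"; then
    echo "$name is already in $file, nothing to do" >&2
    return 0
  fi
  printf '%s %s %s\n' "$ip" "$fqdn" "$name" >> "$file"
}

# Example, as root (the IPs stay whatever your servers use):
#   add_host_entry /etc/hosts 192.168.xx.xx cp-node.example.com cp-node
```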

Reboot both servers, as we are pretty much ready to start with the complex steps.

How to install Docker

I have chosen to keep using Docker for now, although I am aware that cri-o will probably become the de facto container runtime within a few months.

Please run these steps on both servers.

As per usual, let’s start with the basics, which is to update our packages:

sudo apt-get update && sudo apt-get upgrade

The next step is installing Docker, which has been simplified a lot and is now as simple as:

sudo apt-get install docker.io

Once finished, we can quickly check that Docker has been properly installed, and even check the version we are running:

docker version

You should see something like this output:

Client:
 Version:           20.10.7
 API version:       1.41
 Go version:        go1.13.8
 Git commit:        20.10.7-0ubuntu5~20.04.2
 Built:             Mon Nov 1 00:34:17 2021
 OS/Arch:           linux/amd64
 Context:           default
 Experimental:      true
Server:
 Engine:
  Version:          20.10.7
  API version:      1.41 (minimum version 1.12)
  Go version:       go1.13.8
  Git commit:       20.10.7-0ubuntu5~20.04.2
  Built:            Fri Oct 22 00:45:53 2021
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.5.5-0ubuntu3~20.04.1
  GitCommit:
 runc:
  Version:          1.0.1-0ubuntu2~20.04.1
  GitCommit:
 docker-init:
  Version:          0.19.0
  GitCommit:

Docker must be running on every boot for the Kubernetes Cluster to work, so make sure it is enabled to start at boot (the Ubuntu docker.io package may already do this, but it does no harm to run it):

sudo systemctl enable docker

Let’s check now that Docker is running:

sudo systemctl status docker

It should show something like this:

● docker.service - Docker Application Container Engine
     Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)
     Active: active (running) since Sat 2022-01-22 15:00:22 UTC; 3 days ago
       Docs: https://docs.docker.com
   Main PID: 8210 (dockerd)
      Tasks: 19
     CGroup: /system.slice/docker.service
             └─8210 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Have you run all the commands on both servers? If so, please move to the next section.

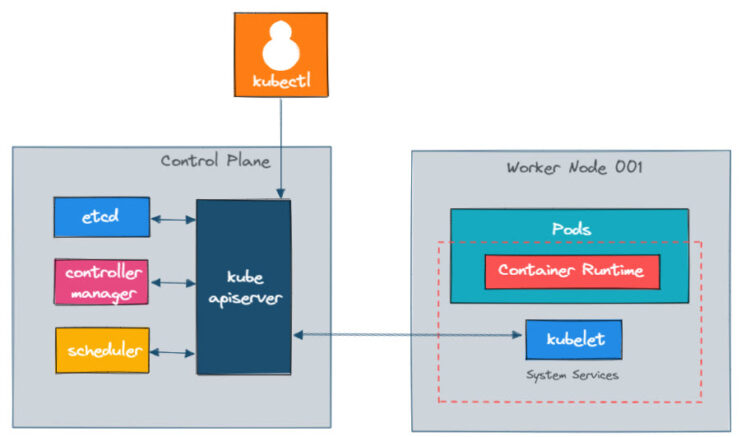

How to install and Configure Kubernetes

We are going to use the official repositories from Google, so the first step, on both servers, is to add the Kubernetes signing key. This lets apt verify that the packages we download from the Google repository are genuine:

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

As we are using Ubuntu 20.04, curl should already be installed. In case that command fails because curl is missing, go ahead and install it:

sudo apt-get install curl

Now it is time to add the Kubernetes software repository, as simple as running the following command:

sudo apt-add-repository "deb http://apt.kubernetes.io/ kubernetes-xenial main"

Important! The latest version of Kubernetes at the time of writing is 1.23, but a lot of applications, Kasten for example, are not yet ready for it, so I will show you how to install a specific Kubernetes version, in this case 1.22.

Let’s now use apt to see which versions are available to us:

apt list -a kubeadm

It should be something similar to this:

Listing... Done
kubeadm/kubernetes-xenial 1.23.3-00 amd64 [upgradable from: 1.23.2-00]
kubeadm/kubernetes-xenial,now 1.23.2-00 amd64 [installed,upgradable to: 1.23.3-00]
kubeadm/kubernetes-xenial 1.23.1-00 amd64
kubeadm/kubernetes-xenial 1.23.0-00 amd64
kubeadm/kubernetes-xenial 1.22.6-00 amd64
kubeadm/kubernetes-xenial 1.22.5-00 amd64
kubeadm/kubernetes-xenial 1.22.4-00 amd64
kubeadm/kubernetes-xenial 1.22.3-00 amd64
kubeadm/kubernetes-xenial 1.22.2-00 amd64
kubeadm/kubernetes-xenial 1.22.1-00 amd64
kubeadm/kubernetes-xenial 1.22.0-00 amd64
kubeadm/kubernetes-xenial 1.21.9-00 amd64
kubeadm/kubernetes-xenial 1.21.8-00 amd64
kubeadm/kubernetes-xenial 1.21.7-00 amd64
kubeadm/kubernetes-xenial 1.21.6-00 amd64
kubeadm/kubernetes-xenial 1.21.5-00 amd64
kubeadm/kubernetes-xenial 1.21.4-00 amd64
kubeadm/kubernetes-xenial 1.21.3-00 amd64
kubeadm/kubernetes-xenial 1.21.2-00 amd64
kubeadm/kubernetes-xenial 1.21.1-00 amd64
kubeadm/kubernetes-xenial 1.21.0-00 amd64
kubeadm/kubernetes-xenial 1.20.15-00 amd64
kubeadm/kubernetes-xenial 1.20.14-00 amd64
kubeadm/kubernetes-xenial 1.20.13-00 amd64
kubeadm/kubernetes-xenial 1.20.12-00 amd64
kubeadm/kubernetes-xenial 1.20.11-00 amd64
kubeadm/kubernetes-xenial 1.20.10-00 amd64

More information about Kubernetes releases can be found on Wikipedia.

As we want the latest 1.22 release, with all its patches, it is as simple as running:

sudo apt install -y kubeadm=1.22.6-00 kubelet=1.22.6-00 kubectl=1.22.6-00

If you want the latest version instead, just run the same line without the =version suffixes, and apt will install the latest available release.
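Instead of picking the version by eye, you can let the shell find the newest patch of a given minor release. This is a sketch: latest_patch is a hypothetical helper that parses the apt list output shown above (the version is the second column) and sorts with GNU sort -V. It also assumes kubeadm, kubelet and kubectl carry the same version string in the repository, which is how the 1.22.6-00 packages used above are named.

```bash
#!/usr/bin/env bash
# latest_patch MINOR
# Reads `apt list -a kubeadm` output on stdin and prints the highest package
# version that belongs to MINOR, e.g. "1.22" -> "1.22.6-00".
latest_patch() {
  # Keep only versions starting with "MINOR." (this also drops the
  # "Listing... Done" header), then version-sort and take the last one.
  awk -v p="$1." 'index($2, p) == 1 { print $2 }' | sort -V | tail -n 1
}

# Example (apt warns on stderr that its CLI is not stable, so we silence it):
#   V=$(apt list -a kubeadm 2>/dev/null | latest_patch 1.22)
#   sudo apt install -y kubeadm="$V" kubelet="$V" kubectl="$V"
```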

Kubernetes is not an application you want updated behind your back, so it is recommended to put the packages on hold so they do not receive any automatic updates:

sudo apt-mark hold kubeadm kubelet kubectl
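To double-check the hold later, for example before a system-wide upgrade, apt-mark showhold prints the held packages one per line. A small sketch (the all_held helper is hypothetical) that fails if any of the three packages is missing from that list:

```bash
#!/usr/bin/env bash
# all_held PKG...
# Reads `apt-mark showhold` output on stdin and succeeds only if every PKG
# appears on a line of its own; reports the first missing one otherwise.
all_held() {
  local held pkg
  held=$(cat)
  for pkg in "$@"; do
    # -x: whole-line match, -F: treat the package name literally.
    if ! printf '%s\n' "$held" | grep -qxF -- "$pkg"; then
      echo "not on hold: $pkg" >&2
      return 1
    fi
  done
}

# Example:
#   apt-mark showhold | all_held kubeadm kubelet kubectl && echo "all held"
```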

After everything has finished, run the following command to check that you are on the Kubernetes version you want:

kubeadm version

The output should match the version you selected; in my case, the latest 1.22 at the moment:

kubeadm version: &version.Info{Major:"1", Minor:"22", GitVersion:"v1.22.6", GitCommit:"f59f5c2fda36e4036b49ec027e556a15456108f0", GitTreeState:"clean", BuildDate:"2022-01-19T17:31:49Z", GoVersion:"go1.16.12", Compiler:"gc", Platform:"linux/amd64"}
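If you script this check, kubeadm version also accepts -o short, which prints just the version string (for example v1.22.6). If you only have the long output at hand, as above, a sed expression can pull out the GitVersion field. A sketch with hypothetical helper names:

```bash
#!/usr/bin/env bash
# git_version: read the long `kubeadm version` output on stdin and print the
# GitVersion field, e.g. v1.22.6.
git_version() {
  sed -n 's/.*GitVersion:"\([^"]*\)".*/\1/p'
}

# expect_version WANT: succeed only if the output on stdin reports WANT.
expect_version() {
  local have
  have=$(git_version)
  if [ "$have" != "$1" ]; then
    echo "expected $1, found ${have:-nothing}" >&2
    return 1
  fi
}

# Example:
#   kubeadm version | expect_version v1.22.6
```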

Almost there, but first, as we are using Docker, we need to change the Docker Cgroup Driver.

Quickly changing the Docker Cgroup Driver

If you skip this step while using Docker and then initialize the Cluster, you will get an error saying that Docker's cgroup driver was detected as cgroupfs instead of systemd. The fix is easy: on both nodes of our Cluster, and on any future workers, run the following:

cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

Restart Docker with the following command:

sudo systemctl restart docker

It is wise to check now that Docker is using the recommended cgroup driver. Running this command should show something similar to:

sudo docker info | grep -i cgroup
 Cgroup Driver: systemd
 Cgroup Version: 1
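The kubelet and Docker must agree on the cgroup driver, and the kubeadm configuration in the next section sets cgroupDriver: systemd for the kubelet. If you want a script to stop early when Docker is still on cgroupfs, this sketch (cgroup_driver is my own helper name) extracts the value from docker info. Docker can also print it directly with docker info --format '{{.CgroupDriver}}'.

```bash
#!/usr/bin/env bash
# cgroup_driver
# Reads `docker info` output on stdin and prints the "Cgroup Driver" value.
cgroup_driver() {
  # Split on ": " so the second field is the value; stop at the first match.
  awk -F': ' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}

# Example:
#   driver=$(sudo docker info 2>/dev/null | cgroup_driver)
#   [ "$driver" = "systemd" ] || echo "Docker cgroup driver is '$driver', expected systemd" >&2
```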

Initializing the Kubernetes Master Node Only

We are ready to run the final command and initialize the Kubernetes Cluster. You could prepare a long command or, better, just use a YAML file with all the configuration. On the control plane node, create a new file called kubeadm-config.yaml with the following content:

apiVersion: kubeadm.k8s.io/v1beta3
kind: ClusterConfiguration
kubernetesVersion: 1.22.6             #<-- Use the word stable for newest version
controlPlaneEndpoint: "cp-node:6443"  #<-- Use the node alias not the IP
networking:
  podSubnet: 192.168.0.0/16           #<-- Add the range you like, written as a network address
---
kind: KubeletConfiguration
apiVersion: kubelet.config.k8s.io/v1beta1
cgroupDriver: systemd
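A word of caution on podSubnet: the kubeadm documentation warns that the pod network must not overlap any of the host networks. Here is a small pure-bash sketch (the helper names are mine) to check whether a node IP falls inside the range you picked:

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D: convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=. a b c d
  read -r a b c d <<< "$1"
  # 10# forces base 10, so an octet such as "08" is not read as octal.
  echo "$(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))"
}

# ip_in_cidr IP NET/PREFIX: succeed if IP lies inside the IPv4 range.
ip_in_cidr() {
  local ip net prefix mask
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Build the 32-bit netmask; bash integers are 64-bit, so trim the excess.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (ip & mask) == (net & mask) ))
}

# Example:
#   ip_in_cidr 192.168.1.103 192.168.0.0/16 && echo "node IP is inside podSubnet"
```

With the addresses used in this walkthrough (nodes on 192.168.1.x), that example succeeds, meaning the two ranges overlap. The cluster here still came up, but on a network like this you may prefer a non-overlapping range such as 10.244.0.0/16.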

Now that we have everything ready, we can Initialize the Kubernetes Cluster:

sudo kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.out

If everything goes as expected, at the end of the process you will have something similar to this, with all the needed info to add workers:

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy
Your Kubernetes control-plane has initialized successfully!
To start using your cluster, you need to run the following as a regular user:
  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config
Alternatively, if you are the root user, you can run:
  export KUBECONFIG=/etc/kubernetes/admin.conf
You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/
Then you can join any number of worker nodes by running the following on each as root:
kubeadm join 192.168.1.102:6443 --token n4h66n.pnxmx3klgjhfofjx \
        --discovery-token-ca-cert-hash sha256:4a53457518ca28d3b825c212a3eefa63affc4c3efea5f3a3f2d54b9b3b59cf26

I recommend running the following steps on the CP node, but you can also run them on any other machine from which you want to control the Cluster, once you have copied admin.conf there:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

With that in place, there is one more step on the control plane before moving on to the worker node: the pod network.

For networking we will use Calico; it is as simple as running the following command:

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

Verify that everything is working as expected, and things are running:

kubectl get pods --all-namespaces

We should get something like this:

NAMESPACE     NAME                                      READY   STATUS    RESTARTS   AGE
kube-system   calico-kube-controllers-958545d87-cwgxr   1/1     Running   0          2m6s
kube-system   calico-node-swd27                         1/1     Running   0          2m6s
kube-system   coredns-78fcd69978-b5q89                  1/1     Running   0          15m
kube-system   coredns-78fcd69978-rkr24                  1/1     Running   0          15m
kube-system   etcd-cp-node                              1/1     Running   3          15m
kube-system   kube-apiserver-cp-node                    1/1     Running   3          15m
kube-system   kube-controller-manager-cp-node           1/1     Running   1          15m
kube-system   kube-proxy-wjxx6                          1/1     Running   0          15m
kube-system   kube-scheduler-cp-node                    1/1     Running   3          15m
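Calico and CoreDNS can take a minute or two to settle, so it is normal to see Pending or ContainerCreating for a short while. If you want to script the wait, this sketch (all_pods_ready is a hypothetical helper) reads the output of kubectl get pods --all-namespaces --no-headers and lists any pod that is not Running with all its containers ready. Note it would also flag pods that legitimately finish, such as Completed jobs.

```bash
#!/usr/bin/env bash
# all_pods_ready
# Reads `kubectl get pods --all-namespaces --no-headers` on stdin
# (columns: NAMESPACE NAME READY STATUS ...), prints every pod that is not
# Running or whose READY count is incomplete, and returns 1 if any exist.
all_pods_ready() {
  awk '{
    split($3, r, "/")   # READY column, e.g. "1/1" -> r[1]=1, r[2]=1
    if ($4 != "Running" || r[1] != r[2]) { print $1 "/" $2, $3, $4; bad = 1 }
  }
  END { exit bad ? 1 : 0 }'
}

# Example: poll until everything is up.
#   until kubectl get pods --all-namespaces --no-headers | all_pods_ready; do sleep 10; done
```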

Cracking job! You have your Control Plane Node ready, and the Cluster running.

How to join Worker Node to Kubernetes Cluster

Adding worker nodes is extremely simple, and of course it helps us expand our capacity to run more namespaces, test HA, etc. We need a valid token to join. Bootstrap tokens expire after 24 hours by default, so the one printed by kubeadm init may already have expired if you have taken a break; let’s check this on the cp-node:

sudo kubeadm token list
## Which gives the following output
TOKEN                     TTL   EXPIRES                USAGES                   DESCRIPTION                                           EXTRA GROUPS
11qk5n.s4ffkj7npoob1pz8   22h   2022-02-01T11:09:43Z   authentication,signing   <none>                                                system:bootstrappers:kubeadm:default-node-token
7ug3l5.u4cvbq0mzw6vluim   20m   2022-01-31T13:09:42Z   <none>                   Proxy for managing TTL for the kubeadm-certs secret   <none>

The second, short-lived token only tracks the two-hour lifetime of the certificates uploaded with --upload-certs, so it is not the one to join with.

So, let’s create a new one just in case:

sudo kubeadm token create
## Take note of this token
h5dfsp.9pke6ekoq83zv4wp

We also need the CA certificate hash. To obtain it, just copy and paste this command:

openssl x509 -pubkey \
  -in /etc/kubernetes/pki/ca.crt | openssl rsa \
  -pubin -outform der 2>/dev/null | openssl dgst \
  -sha256 -hex | sed 's/^.* //'

It will give you something like this:

(stdin)= 6cb0ce0ef9e7af73f845960c3ca654071e3892c72486f1ea018ab12f60ee34d1
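If you do this often, you can wrap the same pipeline in a function. This sketch (ca_cert_hash is my own name) swaps openssl rsa for openssl pkey, so it also copes with a non-RSA CA key, and prints the value already prefixed with sha256: as kubeadm join expects. Alternatively, sudo kubeadm token create --print-join-command prints a complete join command, token and hash included.

```bash
#!/usr/bin/env bash
# ca_cert_hash CERT
# Prints "sha256:<hex>", the SHA-256 of the DER-encoded public key
# (SubjectPublicKeyInfo) of CERT, the format used by
# --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local pub hex
  # Extract the PEM public key; fails cleanly if CERT is not a certificate.
  pub=$(openssl x509 -pubkey -noout -in "$1") || return 1
  # DER-encode the key, hash it, and keep only the digest (last field).
  hex=$(printf '%s\n' "$pub" \
        | openssl pkey -pubin -outform der \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //')
  printf 'sha256:%s\n' "$hex"
}

# Example, on the cp-node:
#   ca_cert_hash /etc/kubernetes/pki/ca.crt
```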

Assuming name resolution works as set up before in /etc/hosts, and both servers can talk to each other, you can now simply run the following command on the worker node:

sudo kubeadm join \
  --token h5dfsp.9pke6ekoq83zv4wp \
  cp-node:6443 \
  --discovery-token-ca-cert-hash \
  sha256:6cb0ce0ef9e7af73f845960c3ca654071e3892c72486f1ea018ab12f60ee34d1
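If the join hangs at the discovery step, first confirm the worker can resolve cp-node and reach the API server port. A minimal sketch using bash's built-in /dev/tcp redirection and the coreutils timeout command (port_open is a hypothetical helper name):

```bash
#!/usr/bin/env bash
# port_open HOST PORT [SECONDS]
# Succeeds if a TCP connection to HOST:PORT opens within SECONDS (default 3).
port_open() {
  local host=$1 port=$2 secs=${3:-3}
  # The child bash opens /dev/tcp/HOST/PORT; host and port are passed as
  # positional arguments rather than pasted into the script string.
  timeout "$secs" bash -c ': < "/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}

# Example, on the worker node:
#   port_open cp-node 6443 || echo "cannot reach cp-node:6443, check /etc/hosts and any firewall" >&2
```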

And that’s it! You now have your Kubernetes Cluster working, with a CP node plus a worker node.

Testing that everything works as expected

Now, to see that we have two nodes, and all are working fine, let’s run through a few commands that might help to troubleshoot and see the Cluster.

Checking the Nodes of the Cluster:

kubectl get nodes
NAME          STATUS   ROLES                  AGE    VERSION
cp-node       Ready    control-plane,master   113m   v1.22.6
worker-node   Ready    <none>                 4m7s   v1.22.6

To see a bit more about the new worker node, we can use the following command:

kubectl describe node worker-node

The result should be something like this:

Name:               worker-node
Roles:              <none>
Labels:             beta.kubernetes.io/arch=amd64
                    beta.kubernetes.io/os=linux
                    kubernetes.io/arch=amd64
                    kubernetes.io/hostname=worker-node
                    kubernetes.io/os=linux
Annotations:        kubeadm.alpha.kubernetes.io/cri-socket: /var/run/dockershim.sock
                    node.alpha.kubernetes.io/ttl: 0
                    projectcalico.org/IPv4Address: 192.168.1.103/24
                    projectcalico.org/IPv4IPIPTunnelAddr: 192.168.168.128
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Mon, 31 Jan 2022 12:58:59 +0000
Taints:             <none>
Unschedulable:      false
Lease:
  HolderIdentity:  worker-node
  AcquireTime:     <unset>
  RenewTime:       Mon, 31 Jan 2022 13:04:26 +0000
Conditions:
  Type                 Status  LastHeartbeatTime                 LastTransitionTime                Reason                       Message
  ----                 ------  -----------------                 ------------------                ------                       -------
  NetworkUnavailable   False   Mon, 31 Jan 2022 12:59:40 +0000   Mon, 31 Jan 2022 12:59:40 +0000   CalicoIsUp                   Calico is running on this node
  MemoryPressure       False   Mon, 31 Jan 2022 12:59:59 +0000   Mon, 31 Jan 2022 12:58:59 +0000   KubeletHasSufficientMemory   kubelet has sufficient memory available
  DiskPressure         False   Mon, 31 Jan 2022 12:59:59 +0000   Mon, 31 Jan 2022 12:58:59 +0000   KubeletHasNoDiskPressure     kubelet has no disk pressure
  PIDPressure          False   Mon, 31 Jan 2022 12:59:59 +0000   Mon, 31 Jan 2022 12:58:59 +0000   KubeletHasSufficientPID      kubelet has sufficient PID available
  Ready                True    Mon, 31 Jan 2022 12:59:59 +0000   Mon, 31 Jan 2022 12:59:29 +0000   KubeletReady                 kubelet is posting ready status. AppArmor enabled
Addresses:
  InternalIP:  192.168.1.103
  Hostname:    worker-node
Capacity:
  cpu:                4
  ephemeral-storage:  19475088Ki
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             16393520Ki
  pods:               110
Allocatable:
  cpu:                4
  ephemeral-storage:  17948241072
  hugepages-1Gi:      0
  hugepages-2Mi:      0
  memory:             16291120Ki
  pods:               110
System Info:
  Machine ID:                 f52bcceed8f246e0a8432e68b1b48cd7
  System UUID:                acb21042-3fdd-ce59-00fb-1b8032bea816
  Boot ID:                    8789b3e4-0ed6-4695-868c-49217ee3664c
  Kernel Version:             5.4.0-96-generic
  OS Image:                   Ubuntu 20.04.3 LTS
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  docker://20.10.7
  Kubelet Version:            v1.22.6
  Kube-Proxy Version:         v1.22.6
PodCIDR:                      192.168.1.0/24
PodCIDRs:                     192.168.1.0/24
Non-terminated Pods:          (2 in total)
  Namespace                   Name                 CPU Requests  CPU Limits  Memory Requests  Memory Limits  Age
  ---------                   ----                 ------------  ----------  ---------------  -------------  ---
  kube-system                 calico-node-bbrz6    250m (6%)     0 (0%)      0 (0%)           0 (0%)         5m30s
  kube-system                 kube-proxy-2dzfm     0 (0%)        0 (0%)      0 (0%)           0 (0%)         5m30s
Allocated resources:
  (Total limits may be over 100 percent, i.e., overcommitted.)
  Resource           Requests   Limits
  --------           --------   ------
  cpu                250m (6%)  0 (0%)
  memory             0 (0%)     0 (0%)
  ephemeral-storage  0 (0%)     0 (0%)
  hugepages-1Gi      0 (0%)     0 (0%)
  hugepages-2Mi      0 (0%)     0 (0%)
Events:
  Type    Reason                   Age                    From        Message
  ----    ------                   ----                   ----        -------
  Normal  Starting                 5m8s                   kube-proxy
  Normal  Starting                 5m31s                  kubelet     Starting kubelet.
  Normal  NodeHasSufficientMemory  5m30s (x2 over 5m30s)  kubelet     Node worker-node status is now: NodeHasSufficientMemory
  Normal  NodeHasNoDiskPressure    5m30s (x2 over 5m30s)  kubelet     Node worker-node status is now: NodeHasNoDiskPressure
  Normal  NodeHasSufficientPID     5m30s (x2 over 5m30s)  kubelet     Node worker-node status is now: NodeHasSufficientPID
  Normal  NodeAllocatableEnforced  5m30s                  kubelet     Updated Node Allocatable limit across pods
  Normal  NodeReady                5m                     kubelet     Node worker-node status is now: NodeReady

So that’s it! From here, you can start deploying applications, pods, whatever you need. A bit long, but I hope you enjoyed the journey.
