Greetings friends, in this series of blog posts about FreeNAS I have been showing you how to deploy it on VMware vSphere in a very comfortable way, how to add an SSL certificate with Let’s Encrypt to publish FreeNAS services securely, and how to configure the FreeNAS Object Storage service (based on MinIO) with just a few clicks.

To conclude the series, I’d like to talk about how we can combine what we’ve learned with Veeam Capacity/Cloud Tier.

Scale-Out Backup Repository – Basics

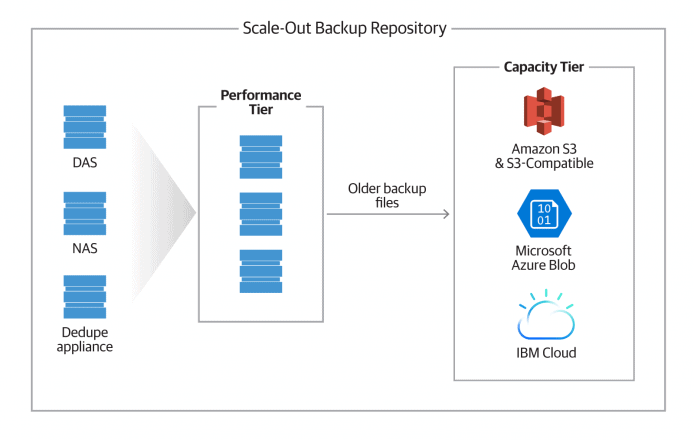

Before we go any further, it is important that we understand what we intend to do. Cloud/Capacity Tier builds on Veeam’s Scale-Out Backup Repository to combine Performance Tier and Capacity Tier.

In a very simple diagram it looks like this: a combination of local extents (Backup Repositories), called the Performance Tier, plus a Capacity Tier based on Object Storage, which receives the copies we no longer need to keep in the Performance Tier:

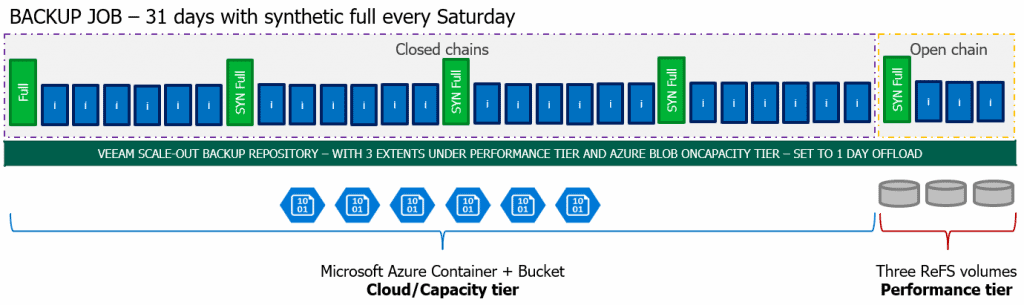

Backup Jobs Cloud/Capacity Tier

If, for example, we wanted to send Backup Jobs with a retention policy of 30 days to a Capacity Tier, and we create a synthetic or full backup each week, we would have something like the diagram below between Performance Tier and Capacity Tier.

The advantage of this method is that on disk we only keep the open backup chain, that is, the last full and its incrementals, while everything else needed to complete those 31 days lives in Object Storage (a chain is uploaded the day after it is closed). This requires good connectivity to FreeNAS. Remember that when the open backup chain of day 31 is closed, the first chain we see on the left will be deleted from Object Storage, and so on, respecting our retention policy.

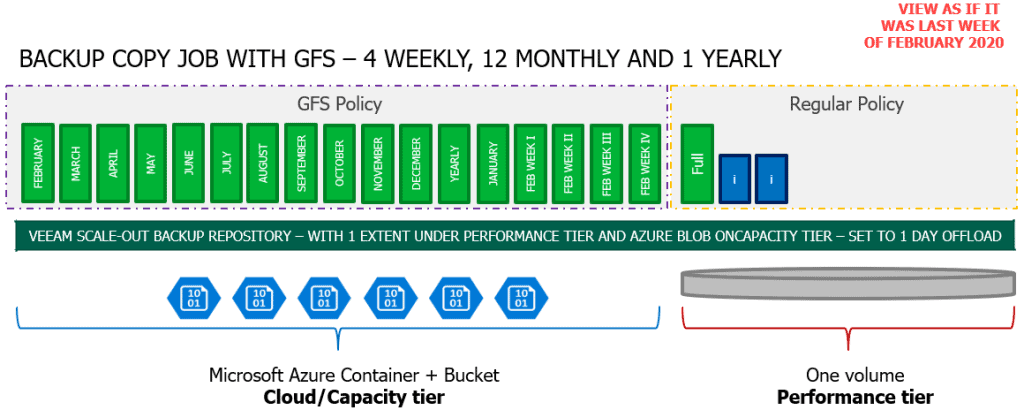
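To make the split between the two tiers concrete, here is a minimal Python sketch of the idea described above. It is only an illustration and not how Veeam implements it: the names `RestorePoint`, `build_points`, `split_chains` and `tier_layout` are hypothetical, and it assumes one restore point per day with a full every seventh day.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class RestorePoint:
    day: int       # day index, 1 is the oldest restore point
    is_full: bool  # True for a full or synthetic full, False for an incremental


def build_points(days: int, full_every: int = 7) -> List[RestorePoint]:
    """Assumption: one restore point per day, a full every `full_every` days."""
    return [
        RestorePoint(day=d, is_full=((d - 1) % full_every == 0))
        for d in range(1, days + 1)
    ]


def split_chains(points: List[RestorePoint]) -> List[List[RestorePoint]]:
    """Start a new chain at every full; incrementals join the current chain."""
    chains: List[List[RestorePoint]] = []
    for point in points:
        if point.is_full or not chains:
            chains.append([])
        chains[-1].append(point)
    return chains


def tier_layout(
    points: List[RestorePoint],
) -> Tuple[List[RestorePoint], List[RestorePoint]]:
    """Return (active chain kept on disk, sealed points eligible for Object Storage)."""
    chains = split_chains(points)
    if not chains:
        return [], []
    active = chains[-1]
    sealed = [point for chain in chains[:-1] for point in chain]
    return active, sealed


points = build_points(31)
active, sealed = tier_layout(points)
print(len(active), len(sealed))  # prints: 3 28
```

With 31 daily points and a weekly full, only the last full and its two incrementals stay in the Performance Tier in this model, and the four closed chains are candidates for the Capacity Tier.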

Cloud/Capacity Tier of Backup Copy Jobs with GFS

If, for example, we wanted to send our GFS restore points, which perhaps makes more sense, we could send the weekly, monthly and yearly fulls. Over the course of a year it would look like this:

On disk we would only have the minimum that the Backup Copy Jobs need to create those fulls, and in Object Storage we would have one full for each month and one for each of the last weeks; if we had any yearly, it would also be there.

Now that the whole concept of Scale-Out Backup Repository and what is uploaded to Object Storage is clearer, let’s see the configuration steps in Veeam.

Object Storage Repository and Capacity Tier configuration in Veeam Backup & Replication v9.5 U4

All that remains is the Veeam configuration. Remember that we can only send files that are complete, that is, files whose chain is not open. In a Backup Copy Job chain, for example, the closed files are the GFS fulls created with the retention we select, in my case 4 Weekly, 12 Monthly and 1 Yearly. Since those GFS files have already been created and are fulls, they are perfect to send.

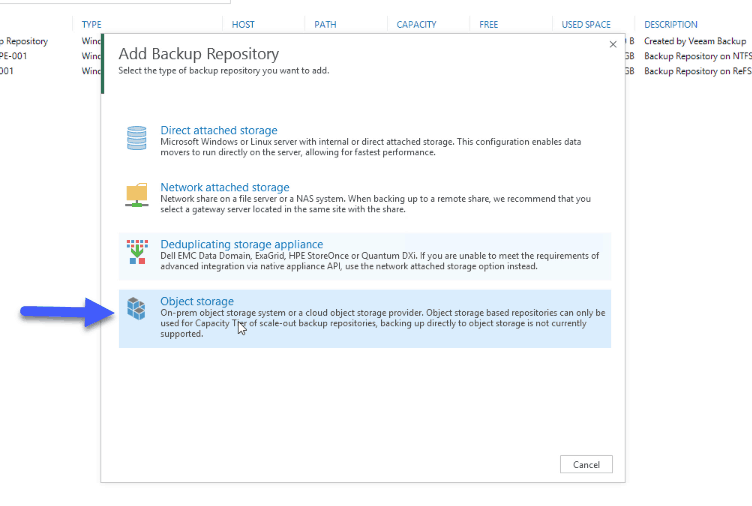

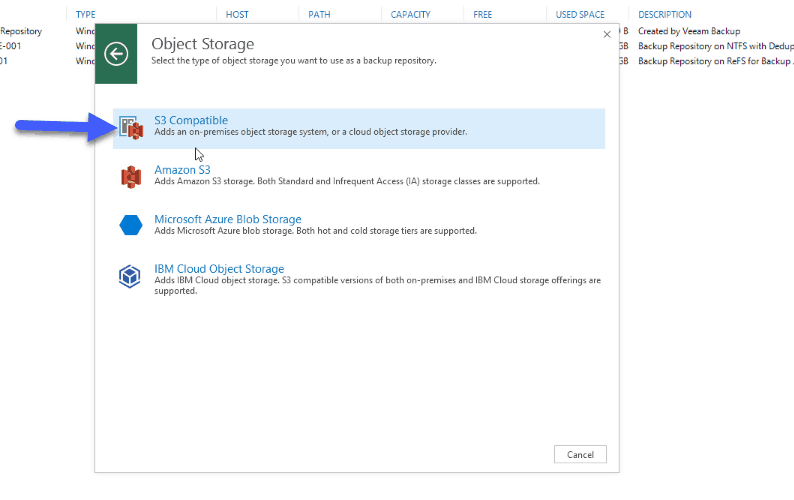

We will start by creating a Backup Repository of Object Storage type, pointing to this FreeNAS:

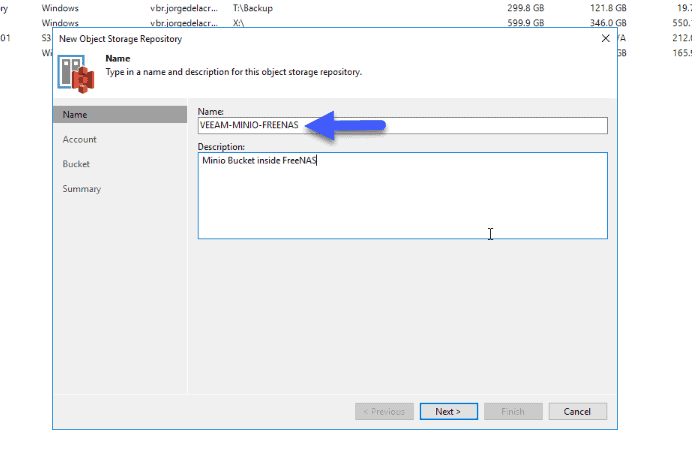

Select S3 Compatible:

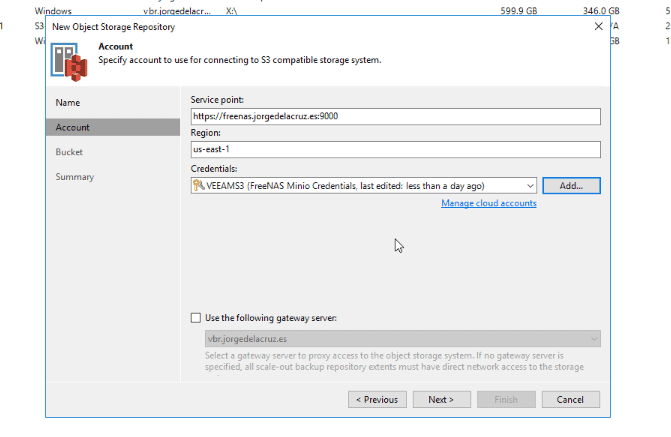

Enter a name for your repository:

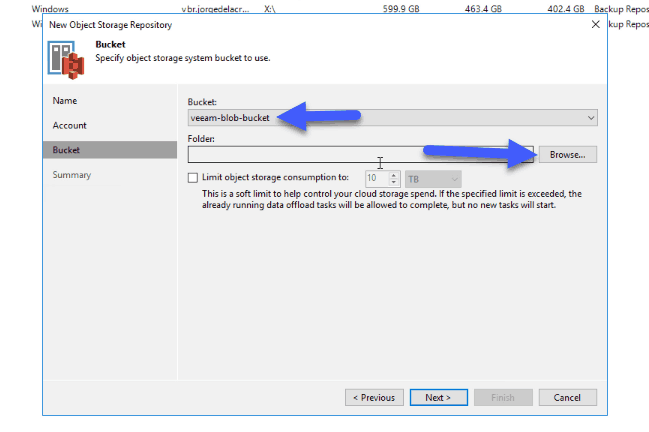

We will now enter the Service point, which for FreeNAS is https://YOURIP:9000. The region is left as it is, and we make sure to select the credentials that we created previously:

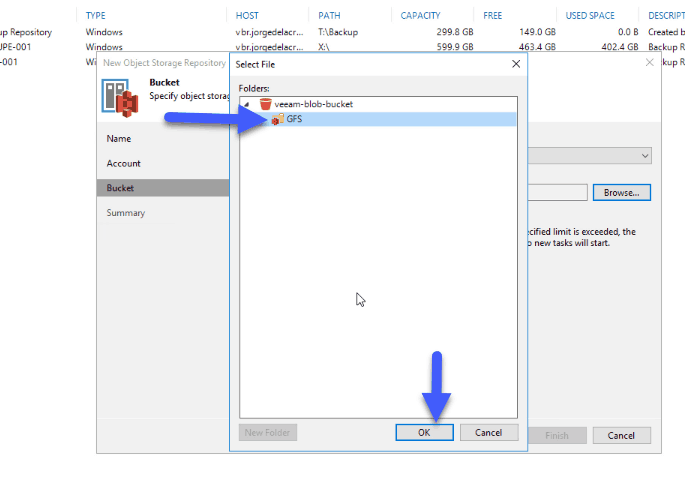
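Before typing the Service point into the wizard, it can help to check that it has the same shape as the one used in this article. The following is a minimal Python sketch under stated assumptions: the helper names `service_point` and `check_service_point` are hypothetical, `192.0.2.10` is a placeholder documentation address, and the checks only mirror the form used here (HTTPS, explicit port 9000, no path), they do not contact FreeNAS.

```python
from typing import List
from urllib.parse import urlsplit


def service_point(host: str, port: int = 9000, use_tls: bool = True) -> str:
    """Build a service point URL in the form used in this article."""
    scheme = "https" if use_tls else "http"
    return f"{scheme}://{host}:{port}"


def check_service_point(url: str) -> List[str]:
    """Return a list of differences from the https://HOST:PORT form; empty means it matches."""
    parts = urlsplit(url)
    problems: List[str] = []
    if parts.scheme != "https":
        problems.append("scheme is not https")
    if not parts.hostname:
        problems.append("no host given")
    if parts.port is None:
        problems.append("no explicit port given")
    if parts.path not in ("", "/"):
        problems.append("unexpected path after the port")
    return problems


# Placeholder address, replace it with the IP or name of your FreeNAS.
url = service_point("192.0.2.10")
print(url, check_service_point(url))
```

An empty list only means the URL is well formed; whether the certificate is trusted and the credentials work is still verified by the Veeam wizard itself.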

We will see the Object Storage bucket, and we will have to create a folder:

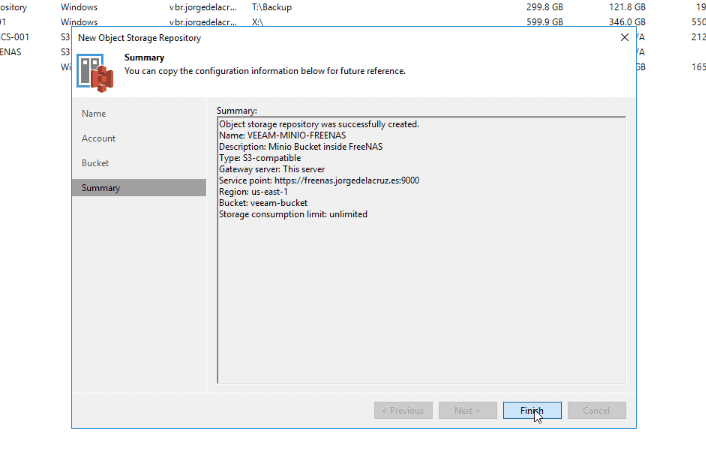

Once we have everything ready, we will click Finish:

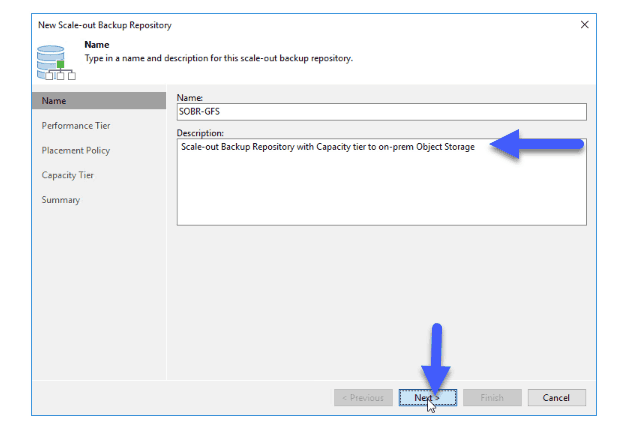

The next and last step is to create a Scale-out Backup Repository, where we combine local repositories with Object Storage repositories, which is where the Capacity Tier lives. We will click Next:

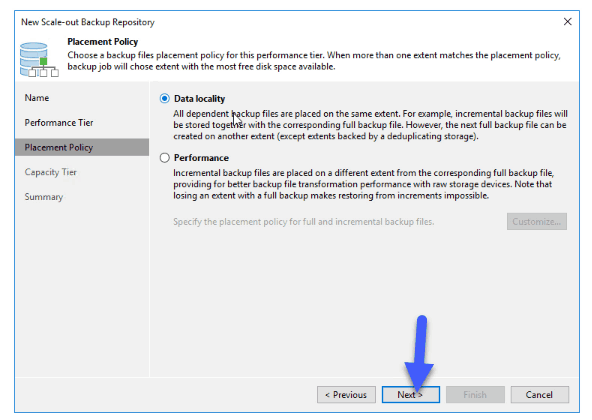

From Performance Tier we will select a repository where we have the GFS copies, and we will leave the policy as Data Locality:

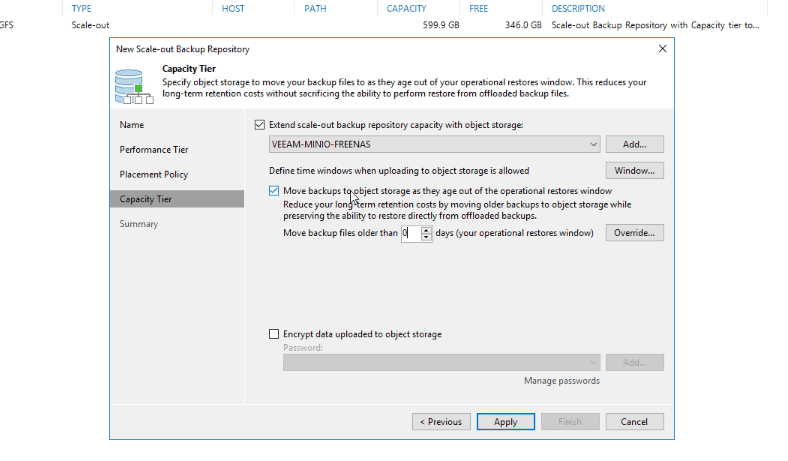

In Capacity Tier we will select the Object Storage Repository. In my case I want to send everything that is already sealed and older than 0 days. This means that if it is Saturday and the weekly is created by policy, this copy will be sent to the object storage within about four hours at most:

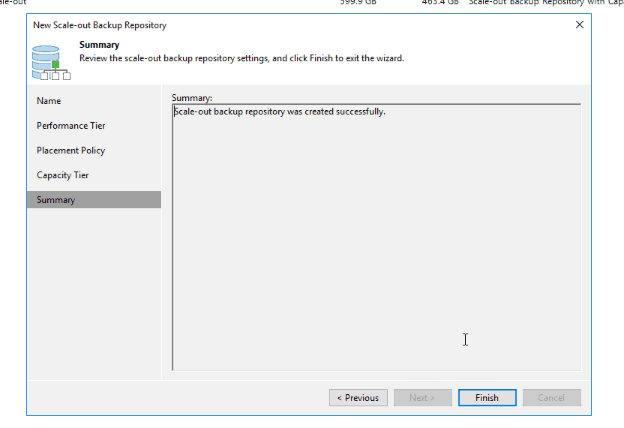
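The rule configured in this step can be read as two conditions: the file must belong to a sealed chain, and it must be at least as old as the number of days we set. Here is a minimal Python sketch of that reading. The function name `eligible_for_offload` and its parameters are hypothetical, and this is only a model of the rule as described in this article, not Veeam's actual logic.

```python
from datetime import datetime, timedelta


def eligible_for_offload(
    created: datetime,
    sealed: bool,
    now: datetime,
    older_than_days: int = 0,
) -> bool:
    """A file qualifies only if its chain is sealed and it is old enough."""
    if not sealed:
        return False
    return now - created >= timedelta(days=older_than_days)


# Example: a weekly GFS full created on a Saturday morning, checked two hours later.
created = datetime(2019, 3, 2, 10, 0)
now = datetime(2019, 3, 2, 12, 0)

print(eligible_for_offload(created, sealed=True, now=now))                       # True
print(eligible_for_offload(created, sealed=True, now=now, older_than_days=14))   # False
print(eligible_for_offload(created, sealed=False, now=now))                      # False
```

With 0 days, a sealed weekly full qualifies as soon as it exists, which is why in my case it leaves the Performance Tier the same day it is created.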

If all is well we will click on Finish:

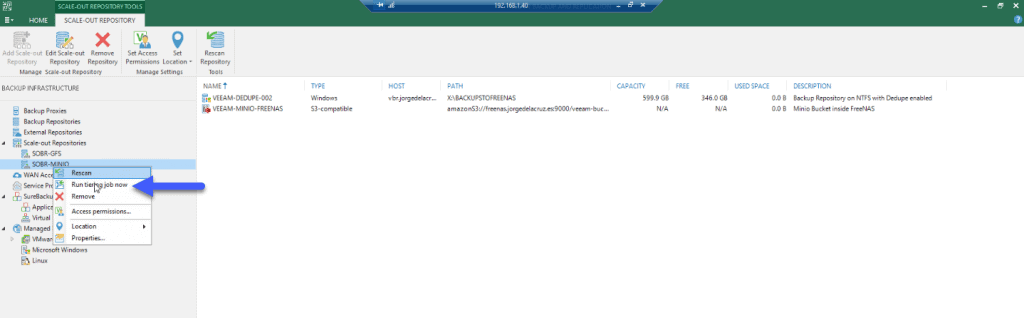

Finally, we will do CTRL + right-click and I will run the job manually, since I want to send everything to my object storage:

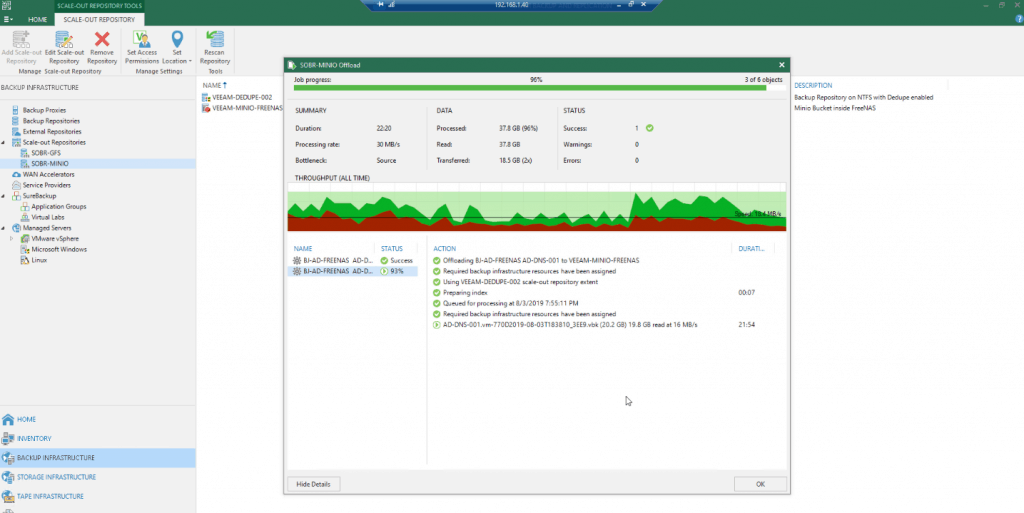

The job will begin, and since we have the Object Storage in our own network, the performance is really good; that is another of the advantages, apart from the security, data protection, etc.

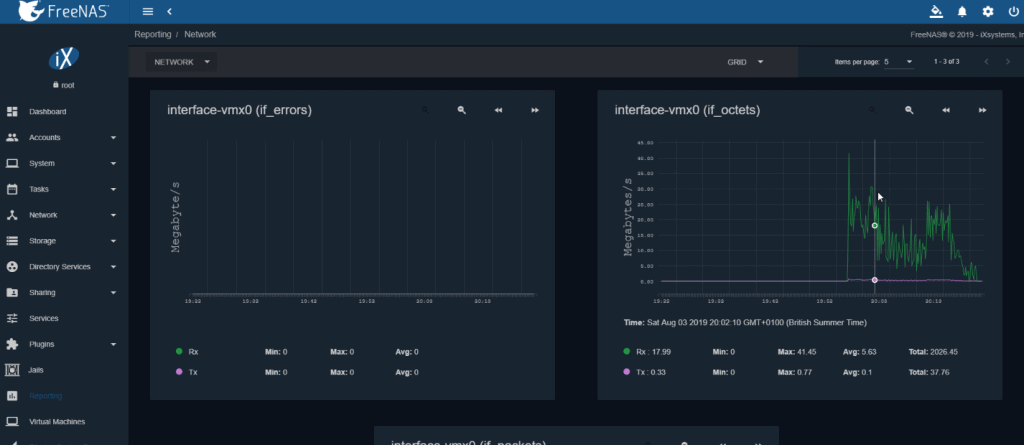

If we go back to our FreeNAS we will be able to see the space consumption, and in the performance graph we will also be able to see how it moves.

That’s all friends. I hope you like it, that you think about creating your own free and very powerful Object Storage with FreeNAS in your labs, and that you leave comments on the article.

I leave you the whole menu with the entries on FreeNAS:

- FreeNAS: Initial installation and configuration of FreeNAS 11.x as VM within vSphere

- FreeNAS: Enable and configure Object Storage in FreeNAS 11.x compatible with S3 APIs – Based on MinIO

- FreeNAS: How to Deploy a Let’s Encrypt SSL Certificate in FreeNAS 11.x and HTTPS Configuration

- FreeNAS: Configure Veeam Backup Repository Object Storage connected to FreeNAS (MinIO) and launch Capacity Tier

Hi JORGE

Nice article, we are currently looking at testing this in our lab and by pure chance came across your article.

Now that it’s been a few months of using Freenas + ZFS and Minio how are you finding the ZFS performance?

Trolling through the FreeNAS and Veeam forums there have been mixed results with ZFS performance as a target but this is directly via CIFS or NFS share and S3 hasn’t been covered at all.

would be interested to discuss the project results in more detail.

Cheers

Gerardo

Hello Gerardo,

I have the opinion that it works as fast as you size it, FreeNAS has some recommendations when deploying it, if you are not doing anything fancy like dedupe or compress on the ZFS volumes, then the requirements are lower, but still when enabling minio that consumes more RAM. So, just take a look at the CPU and RAM requirements, and also disk, of course.

I am biased as I am running all on VSAN with NVMe and SSD, so you can imagine the performance of this system 🙂 It is just an abstraction of the resources you give to it. My recommendation? Give it a go, it is free 🙂

Hi George

We are looking to experiment with an all SSD ZFS config starting with 10 x 8 TB drives expandable to 24 on a single box.

It will be interesting to see where ZFS performance starts to degrade.

Thinking on FreeNAS just for the Object Storage part, or CIFS/NFS as well?

Hi Jorge

thanks for the encouragement 🙂

we will be playing with an all SSD ZFS box starting with 10 x 8 TB drives and eventually extend out to 24, trying to see where performance starts to degrade.

it will be interesting to see how it performs directly compared to using MinIO erasure coding which is meant to scale better than ZFS, less functional but scales much better.

So you’re not using this in production? was it just a Dev deployment ?

Cheers

G

Hello Gerardo,

That is right, just for my lab, and real backups of my Homelab and my Office 365 accounts, but I will not call this production, even if doing 50VMs Backups, and a few Office 365 accounts.

That's a good question, it will depend on how FreeNAS performs as a storage target for Veeam with backup and restore processes tested under load.

We are waiting for Veeam to come back to us for any best practises, according to the TrueNAS website it is Veeam certified so looking to get more information from Veeam on this and see what the ultimate config will be.

Not sure if using NFS is preferred, as the Veeam > FreeNAS CIFS/SMB implementation may be limited to SMB1 or 2.

If it works well we may look at adding it as a second or third tier backup repository to complement our StoreOnce repositories. Since both MinIO and ZFS can replicate at different levels (bucket, dataset or pool), it may be an interesting fit for internal use cases and client-side bucket replication between datacenters.

very keen to see what we can achieve 🙂

Cheers

G